Test data management

Written by Maarten Urbach

There’s an ever-growing need for software development to be better, faster, and cheaper. With rising end-user demand and unrelenting competition, a proper testing strategy is critical. An effective testing strategy consists of several components, one of which is Test Data Management (TDM).

Test data management is one of the big challenges in software development and QA. Test data must be highly available, quick and easy to refresh, and compliant with privacy laws and regulations. Getting this right improves the quality of your software and, ultimately, its time to market.

Let’s discover how to manage your test data!

What is test data management?

First, let’s agree on what the term means: TDM is the process of creating, managing, and provisioning realistic test data for non-production purposes such as training, testing, development, and QA. It ensures that testing teams get test data of the right quality, in a suitable quantity, in the proper environment, in the correct format, and at the appropriate time. In other words:

“The right test data in the right place at the right time”

Test data management involves a number of activities, including identifying and selecting the appropriate data for testing, preparing the data for use in testing, and managing and storing the data throughout the testing process. The goal of test data management is to ensure that the data used for testing is accurate, relevant, and up-to-date, and that it properly reflects the real-world conditions in which the software or system will be used. This can help to improve the quality of testing, and ultimately the quality of the software or system being tested.

The need for proper test data

Every developer knows that a new product must be tested to determine whether it lives up to expectations; releasing unstable software would tarnish the company’s reputation. For this reason, test drivers complete countless laps in new concept cars, just as software testers evaluate the latest versions of new applications.

Like a car, an application needs fuel to work. An application is designed to process information, or data, and without data there is nothing to process. This is why test data is essential: it is the fuel for an application in a test environment. In the past, test data was limited to a few sample input files or a few rows in a database. Today, companies rely on robust test data sets with unique combinations of values to achieve high coverage in their testing efforts.
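To make “unique combinations” concrete, here is a minimal Python sketch. The three test dimensions and their values are hypothetical; the point is that every combination of attribute values becomes one test data record, so the number of records is the product of the number of values per dimension.

```python
from itertools import product

# Hypothetical test dimensions and values.
customer_types = ["private", "business"]
countries = ["NL", "BE", "DE"]
payment_methods = ["iDEAL", "credit card", "invoice"]

# One test data record per unique combination of values.
test_cases = [
    {"customer_type": c, "country": k, "payment_method": p}
    for c, k, p in product(customer_types, countries, payment_methods)
]
print(len(test_cases))  # 18 = 2 * 3 * 3
```

With more dimensions this product grows quickly, which is why teams often select a covering subset of combinations rather than all of them.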

Yet test data receives surprisingly little attention, because testers simply gather whatever data they need for the test cases they are about to execute. The application runs in a test environment, that environment contains data, and so the tests can be executed. If only it were that simple…

To draw one final comparison with a car: if you pour diesel into a petrol-powered car, you probably won’t get very far. Worse, if you are unaware of the mistake, you might end up disassembling the engine to figure out why it isn’t running properly, only to discover that the problem was the wrong fuel. Test data works much the same way. To judge whether the result of a test is correct, you must be certain that the input you gave the application is valid.

Software testing variables

Testing comprises three variables:

1) Test object

2) Environment

3) Test data

If you want the testing process to run smoothly, meaning that every defect you find is a defect in the software under test (the test object), you need to control and manage the other two variables: the environment and the test data. When you lack control over test data, complications arise during test execution.

To control the data in any given environment, you need test data management. Test data is not limited to one object, environment, or testing type; it affects all applications and processes within your IT landscape. It is therefore imperative to think about test data management and to establish a policy for it.

Test data management strategies

TDM encompasses data generation, data masking, provisioning, and virtualization & cloning. Automating these activities makes the data management process more efficient. One way to do this is to link test data to a specific test and feed it into automation software that delivers the data in the format the test expects.
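The idea of linking test data to a particular test can be sketched in Python as a decorator. The catalogue, the data set name, and `with_test_data` are hypothetical and stand in for whatever a real automation tool provides; the sketch only shows a named data set being bound to a test and delivered in the format that test expects (here a Python dict or a JSON string).

```python
import copy
import functools
import json

# Hypothetical catalogue of prepared (masked) test data sets, keyed by name.
TEST_DATA_CATALOGUE = {
    "customer_with_overdue_invoice": {
        "customer_id": 4711,
        "invoices": [{"invoice_id": 1, "days_overdue": 45}],
    },
}


def with_test_data(name, fmt="dict"):
    """Bind a named test data set to a test and pass it in the expected format."""
    def decorator(test_func):
        @functools.wraps(test_func)
        def wrapper(*args, **kwargs):
            # Deep copy: one test can never change the data another test receives.
            data = copy.deepcopy(TEST_DATA_CATALOGUE[name])
            payload = json.dumps(data) if fmt == "json" else data
            return test_func(payload, *args, **kwargs)
        return wrapper
    return decorator


@with_test_data("customer_with_overdue_invoice", fmt="json")
def check_overdue_reminder(payload):
    # This check receives JSON, as a (hypothetical) API under test would.
    invoice = json.loads(payload)["invoices"][0]
    assert invoice["days_overdue"] > 30


check_overdue_reminder()
```

In pytest, the same link is more commonly expressed as a fixture; the decorator form keeps this sketch free of third-party dependencies.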

How to: test data management

Not every environment requires every activity, but the following components form the basis of most TDM strategies.

Data discovery

Data discovery helps you determine where privacy-sensitive information is located in your database. It also reveals data anomalies and pollution within your database. The resulting insight into your data helps you select the cases you need for your tests.
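As an illustration, a very simple form of data discovery profiles each column against patterns for known kinds of sensitive data. The sketch below assumes rows arrive as Python dicts and uses three hypothetical, deliberately rough regular expressions; it matches on shape only (the IBAN pattern, for instance, does not verify check digits). Values that do not fit a column’s dominant pattern are reported as possible pollution.

```python
import re

# Hypothetical, deliberately rough detection rules.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "iban": re.compile(r"[A-Z]{2}\d{2}[A-Z0-9]{10,30}"),
    "dutch_postcode": re.compile(r"\d{4} ?[A-Z]{2}"),
}


def discover(rows, threshold=0.8):
    """Flag columns whose non-empty values mostly match a PII pattern.

    Returns {column: (label, outliers)}; outliers are values in a flagged
    column that do not match its pattern and may indicate polluted data.
    """
    report = {}
    for column in (rows[0].keys() if rows else []):
        values = [str(r[column]) for r in rows if r.get(column) not in (None, "")]
        if not values:
            continue
        for label, pattern in PII_PATTERNS.items():
            matches = sum(1 for v in values if pattern.fullmatch(v))
            if matches / len(values) >= threshold:
                outliers = [v for v in values if not pattern.fullmatch(v)]
                report[column] = (label, outliers)
                break
    return report


rows = [
    {"id": 1, "contact": "a.smit@example.org", "iban": "NL91ABNA0417164300", "note": "call back"},
    {"id": 2, "contact": "b.vos@example.org", "iban": "NL91ABNA0417164300", "note": ""},
    {"id": 3, "contact": "c.bakker@example", "iban": "NL91ABNA0417164300", "note": None},
    {"id": 4, "contact": "d.jong@example.org", "iban": "NL91ABNA0417164300", "note": "vip"},
    {"id": 5, "contact": "e.berg@example.org", "iban": "NL91ABNA0417164300", "note": None},
]
report = discover(rows)
print(report)
```

Here `contact` is flagged as an email column with `c.bakker@example` as a suspicious value, and `iban` as an IBAN column; `id` and `note` are not flagged.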

Data masking

One of the most urgent components of TDM is the anonymization or obfuscation of privacy-sensitive data. Most data protection rules and regulations prohibit organizations from using personally identifiable information for testing.
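One common masking technique is deterministic pseudonymization with a keyed hash: the same real value always maps to the same fake value, so a customer who appears in several tables stays consistent and joins keep working. The sketch assumes email addresses and a hypothetical key. Note that this is pseudonymization rather than full anonymization, because anyone holding the key can hash a known address and find its pseudonym.

```python
import hashlib
import hmac

# Hypothetical key: keep the real one out of source control and out of test environments.
MASKING_KEY = b"replace-with-a-secret-key"


def pseudonym(value: str, length: int = 16) -> str:
    """Deterministically map a value to a short hex token (HMAC-SHA256).

    Longer tokens make collisions between different inputs less likely.
    """
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_email(email: str) -> str:
    """Replace a real address with a consistent, obviously fake one."""
    # Normalize first so that "Jan@Bank.nl" and "jan@bank.nl" mask identically.
    return f"user_{pseudonym(email.strip().lower())}@example.com"


print(mask_email("Jan.Jansen@Bank.nl") == mask_email("jan.jansen@bank.nl"))  # True
```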

Synthetic data generation

Instead of applying masking rules, you can use generation rules to replace existing (privacy-sensitive) data with synthetically generated data. This is useful for values such as names, dates, IBANs, and social security numbers (SSNs).
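Generation rules usually have to respect the format and checksums of the field they replace, otherwise the application rejects the data. As an example, the sketch below generates Dutch-format IBANs whose check digits satisfy the ISO 13616 mod-97 check. The function names are hypothetical, and the bank code and account number are random rather than taken from a real customer, although they are not guaranteed to differ from every real account.

```python
import random
import string


def _to_digits(s: str) -> str:
    # ISO 13616: digits stay as-is, letters become numbers (A=10, B=11, ..., Z=35).
    return "".join(str(int(ch, 36)) for ch in s)


def iban_is_valid(iban: str) -> bool:
    iban = iban.replace(" ", "").upper()
    # Move country code and check digits to the end, then apply the mod-97 check.
    return int(_to_digits(iban[4:] + iban[:4])) % 97 == 1


def synthetic_nl_iban(rng: random.Random) -> str:
    """Generate a fake Dutch-format IBAN with valid mod-97 check digits."""
    bank_code = "".join(rng.choice(string.ascii_uppercase) for _ in range(4))
    account = "".join(rng.choice(string.digits) for _ in range(10))
    bban = bank_code + account
    # Check digits = 98 - (number with "00" as placeholder check digits) mod 97.
    check = 98 - int(_to_digits(bban + "NL00")) % 97
    return f"NL{check:02d}{bban}"


rng = random.Random(42)  # fixed seed: reproducible test data across refreshes
print([synthetic_nl_iban(rng) for _ in range(3)])
print(iban_is_valid("NL91ABNA0417164300"))  # True: a widely published example IBAN
```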

Data subsetting

Subsets make it much easier to give every team its own test database: the smaller data sets need less storage and significantly reduce idle time.
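The key requirement of a subset is referential integrity: every row in the subset that points to another row must find that row in the subset too. A minimal sketch with Python’s built-in `sqlite3` module, assuming a hypothetical two-table schema, selects customers by country (the driving filter) and then follows the foreign key to copy exactly their orders.

```python
import sqlite3

# Hypothetical two-table schema.
SCHEMA = """
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(id),
    amount REAL
);
"""


def make_subset(src: sqlite3.Connection, country: str) -> sqlite3.Connection:
    """Copy the customers of one country plus all of their orders into a new database."""
    dst = sqlite3.connect(":memory:")
    dst.executescript(SCHEMA)
    # 1. Driving table: the filter that defines the subset.
    customers = src.execute(
        "SELECT id, name, country FROM customer WHERE country = ?", (country,)
    ).fetchall()
    dst.executemany("INSERT INTO customer VALUES (?, ?, ?)", customers)
    # 2. Dependent table: follow the foreign key so that no order loses its customer.
    ids = [row[0] for row in customers]
    if ids:
        placeholders = ",".join("?" * len(ids))
        orders = src.execute(
            f"SELECT id, customer_id, amount FROM orders WHERE customer_id IN ({placeholders})",
            ids,
        ).fetchall()
        dst.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
    dst.commit()
    return dst


# Usage with a tiny in-memory stand-in for a production database.
src = sqlite3.connect(":memory:")
src.executescript(SCHEMA)
src.executemany("INSERT INTO customer VALUES (?, ?, ?)",
                [(1, "Anna", "NL"), (2, "Bart", "BE"), (3, "Cees", "NL")])
src.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                [(10, 1, 25.0), (11, 2, 40.0), (12, 3, 15.0), (13, 3, 5.0)])
src.commit()
subset = make_subset(src, "NL")
print(subset.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 3
```

Real schemas have many more relationships, so a full subset would have to follow the whole foreign-key graph; very large ID lists would also need batching, because SQLite limits the number of bound parameters per statement.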

Test data virtualization

Virtual copies are separate from the actual databases and let users work with the data in a safe, isolated environment. You can make changes, test them, and run simulations without affecting the source.
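One common way to implement virtual copies is copy-on-write: the copy shares the source data and stores only its own changes. The in-memory Python class below is a hypothetical, conceptual sketch of that idea, not how any particular virtualization product works; real tools operate at the storage or database level.

```python
_DELETED = object()  # tombstone: marks a row as deleted in this copy only


class VirtualCopy:
    """Copy-on-write view over a shared, read-only base table (hypothetical sketch)."""

    def __init__(self, base):
        self._base = base   # shared source data, never modified
        self._delta = {}    # rows this copy has changed, added, or deleted

    def get(self, key, default=None):
        if key in self._delta:
            value = self._delta[key]
            return default if value is _DELETED else value
        return self._base.get(key, default)

    def put(self, key, value):
        self._delta[key] = value  # the write lands in the copy, not in the source

    def delete(self, key):
        self._delta[key] = _DELETED

    def keys(self):
        candidates = set(self._base) | set(self._delta)
        return {k for k in candidates if self.get(k, _DELETED) is not _DELETED}

    def reset(self):
        self._delta.clear()  # discard all changes: back to the source state


source = {1: "Anna", 2: "Bart"}
team_copy = VirtualCopy(source)
team_copy.put(2, "Bert")
team_copy.delete(1)
print(source)                    # {1: 'Anna', 2: 'Bart'}: the source is untouched
print(sorted(team_copy.keys()))  # [2]
```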

Test data provisioning

Both QA and DBA teams benefit greatly from easy test data distribution. A TDM portal lets teams refresh their own test data sets at the push of a button. The only waiting time is the actual (technical) data processing time.
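A “refresh at the push of a button” amounts to overwriting a team’s test database with a known-good (for example, masked) source. As a minimal sketch, SQLite’s online backup API, exposed in Python as `sqlite3.Connection.backup`, can play that role; the golden copy and the `refresh` helper are hypothetical stand-ins for whatever restore or cloning mechanism your database offers.

```python
import sqlite3


def refresh(golden: sqlite3.Connection, team_db: sqlite3.Connection) -> None:
    """Overwrite a team's test database with the current golden (masked) copy."""
    golden.backup(team_db)  # SQLite online backup: replaces the target's contents


# Hypothetical golden copy holding already-masked data.
golden = sqlite3.connect(":memory:")
golden.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
golden.executemany("INSERT INTO customer VALUES (?, ?)", [(1, "user_01"), (2, "user_02")])
golden.commit()

team_db = sqlite3.connect(":memory:")
refresh(golden, team_db)
team_db.execute("DELETE FROM customer")  # a test run consumes or modifies the data
team_db.commit()
refresh(golden, team_db)                 # back to a known state on demand
print(team_db.execute("SELECT COUNT(*) FROM customer").fetchone()[0])  # 2
```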

Test data automation

If you want to automate your test process, you’ll need automated test data, and for automated test data you’ll need a test data automation tool: one that provides ready-made data and integrates with your other software testing tools.
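One thing such a tool has to handle is handing ready-made records to automated tests without two tests using the same record at once. The hypothetical `RecordPool` below sketches that reservation idea in Python. Its lock only protects against threads in a single process, so tests running in separate processes or on separate machines would need a shared store instead.

```python
import threading


class RecordPool:
    """Hands out ready-made test records so that parallel tests never share one."""

    def __init__(self, records):
        self._available = list(records)
        self._lock = threading.Lock()  # claims may come from concurrent test threads

    def claim(self, predicate=lambda record: True):
        """Reserve and return the first available record that matches `predicate`."""
        with self._lock:
            for i, record in enumerate(self._available):
                if predicate(record):
                    return self._available.pop(i)
        raise LookupError("no matching test data left; refresh the data set")

    def release(self, record):
        """Return a record to the pool once the test no longer needs it."""
        with self._lock:
            self._available.append(record)


pool = RecordPool([
    {"customer_id": 1, "type": "private"},
    {"customer_id": 2, "type": "business"},
])
record = pool.claim(lambda r: r["type"] == "business")
print(record["customer_id"])  # 2
pool.release(record)
```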

Benefits of test data management

There are several reasons to start with TDM. Frequently heard reasons include:

1. The need to address data anonymization or synthetic data generation due to privacy laws.

2. The desire to expedite time-to-market, which may be hindered by large environments.

But test data management offers many more benefits:

Find data-related bugs

Test data obfuscation matters because 15% of all bugs found are data-related, for example bugs caused by data quality issues. Masking production data keeps the records that trigger these data-related bugs in your test data set, so that the bugs are found and fixed before you go to production.
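Why masking keeps these issues can be sketched in Python: the hypothetical `mask_keep_shape` below replaces every digit with a digit and every letter with a letter of the same case, but keeps lengths, separators, stray characters, and missing values. A malformed postcode therefore stays malformed after masking, so a bug it triggers in production can still be reproduced in test. This is a simplified character-class substitution, not a standardized format-preserving encryption scheme.

```python
import hashlib
import random
import string


def mask_keep_shape(value, secret="hypothetical-secret"):
    """Mask letters and digits but keep length, character classes, and anomalies."""
    if value is None or value == "":
        return value  # missing values are kept: they can trigger data-related bugs too
    # Seed from a hash of secret + value so the same input always gets the same mask.
    rng = random.Random(hashlib.sha256((secret + value).encode("utf-8")).digest())
    masked = []
    for ch in value:
        if ch.isdigit():
            masked.append(rng.choice(string.digits))
        elif ch.isupper():
            masked.append(rng.choice(string.ascii_uppercase))
        elif ch.islower():
            masked.append(rng.choice(string.ascii_lowercase))
        else:
            masked.append(ch)  # spaces, dashes, and stray characters stay in place
    return "".join(masked)


for postcode in ["1234 AB", "1234AB ", "12 34 ab!", None]:
    print(repr(postcode), "->", repr(mask_keep_shape(postcode)))
```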

Shorter time-to-market

Effective test data management helps you detect and resolve data-related bugs early. By using realistic test data, you can identify and fix issues before they reach the production environment, which improves overall software quality and keeps late surprises from delaying a release.

Faster data refresh

Streamlined test data management accelerates the development process. With efficient data provisioning and refresh, teams spend less time setting up and managing test environments, which leads to faster product releases.

Test data management software

Being in control of test data is increasingly important. Subsetting technology allows you to deploy smaller sets of test data to any environment, offering flexibility without size limitations. A robust test data management platform enables you to provide each test team with their own masked test data set that they can refresh on demand. This approach not only enhances efficiency and performance but also serves as a strategic move to help your business grow and become an industry leader.

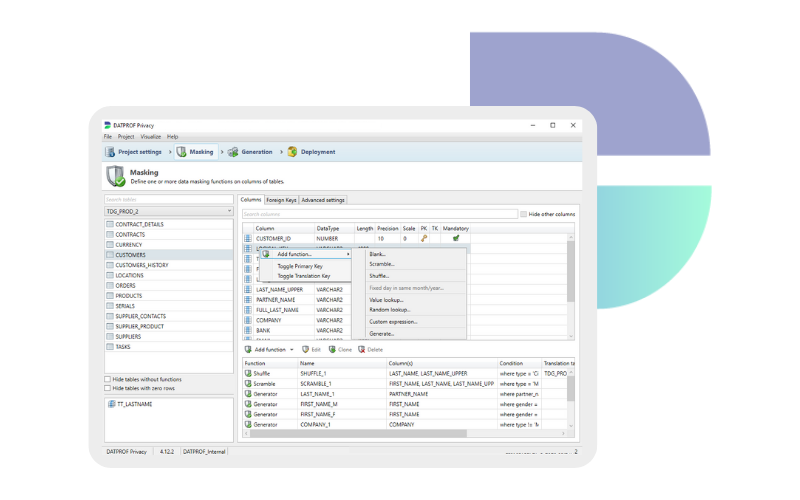

The DATPROF test data platform consists of several modules that help you modernize your test data management. The foundation of the platform is DATPROF Runtime, the self-service portal where test data projects are executed. In a typical test data management implementation, the most frequently used modules are:

- DATPROF Analyze for the purpose of analyzing and profiling a data source;

- DATPROF Privacy for the purpose of modeling masking templates;

- DATPROF Subset for the purpose of modeling subset templates;

- DATPROF Virtualize for the purpose of cloning and snapshotting.

The DATPROF platform is designed to minimize effort and time during each stage of the lifecycle, which translates directly into fast implementation and easy maintenance.


FAQ

What is test data management?

TDM is the process of getting or creating realistic test data for non-production purposes. It ensures that the software testing teams get the right quality test data in a suitable quantity, proper environment, correct format, and appropriate time: the right test data in the right place at the right time.

How does test data management work?

It covers the full process of making test data easily accessible and readily available. One part is privacy: privacy regulations may prevent test data from being used as-is, so it must be protected before it can be used.

Another part is making test data easily available when databases are as large as they are nowadays: how can you create small, agile sets of test data that support your software delivery process?

About the writer

Maarten Urbach has spent over a decade helping customers enhance test data management. His work focuses on modernizing practices in staging and lower level environments, significantly improving software efficiency and quality. Maarten's expertise has empowered a range of clients, from large insurance firms to government agencies, driving IT innovation with advanced test data management solutions.