Generative AI for Test Data Generation

Artificial intelligence is increasingly becoming an integral part of our daily lives. Sometimes we consciously use it, such as with ChatGPT, but more and more often, AI is employed without us being aware of it. In this article, we aim to explore how artificial intelligence is used for the generation of synthetic test data for software development.

As with many new technologies, the promises are often exciting, but the actual applications may only become apparent after a few years. We have all witnessed how the promise of blockchain was expected to revolutionize everything. In no time, numerous companies started using this technology to solve various problems. Or consider the promise of NoSQL databases, which were expected to replace all relational databases within a few years.

A recent example is the promise of virtual reality headsets that were supposed to change the gaming industry forever. Generally, it takes several years to determine which technologies and applications will stand the test of time. An illustrative example of this is the Gartner Hype Cycle. Currently, Generative AI is at the top of the “peak of inflated expectations.”

How does Generative AI work?

When we use artificial intelligence to generate test data, the software first needs to build a model. Generative AI models, or foundation models, use machine learning to learn the relationships between attributes from training data, which enables them to create new data based on these relationships. However, there are some important considerations to keep in mind when using generative AI for test data generation.
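To make the idea of learning relationships between attributes concrete, here is a deliberately tiny sketch. It is a simple frequency model, not a foundation model, and the attribute names (`age_group`, `medication`) and the training rows are invented for illustration only.

```python
import random
from collections import Counter, defaultdict

# Hypothetical training rows: (age_group, medication). Purely illustrative data.
training_rows = [
    ("child", "amoxicillin"),
    ("child", "amoxicillin"),
    ("child", "ibuprofen"),
    ("adult", "ibuprofen"),
    ("adult", "metformin"),
    ("senior", "metformin"),
    ("senior", "metformin"),
    ("senior", "atorvastatin"),
]

def fit(rows):
    """Learn how often each age group occurs and which medications go with it."""
    age_counts = Counter(age for age, _ in rows)
    meds_by_age = defaultdict(Counter)
    for age, med in rows:
        meds_by_age[age][med] += 1
    return age_counts, meds_by_age

def sample(model, n, seed=0):
    """Draw n new rows that follow the learned relationship."""
    age_counts, meds_by_age = model
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        age = rng.choices(list(age_counts), weights=list(age_counts.values()))[0]
        meds = meds_by_age[age]
        med = rng.choices(list(meds), weights=list(meds.values()))[0]
        rows.append((age, med))
    return rows

model = fit(training_rows)
synthetic_rows = sample(model, 5)
```

Because the medication is sampled conditionally on the age group, every generated row respects a combination seen in training. Real generative models learn far richer relationships, which is also what makes them harder to inspect.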

Black Box

The power of artificial intelligence lies in its ability to learn numerous relationships between attributes in the data. The result of this process is a model that can be used to generate new data. However, this potential also brings certain issues with it. The model effectively functions as a black box, so it is not always possible to explain why data is generated in a certain way. The more attributes with possible relationships there are, the more complex the black box becomes. The model may have learned relationships that are coincidental and have no real meaning, while it may also overlook significant relationships.

This becomes particularly challenging when relationships are spread across different tables. For example, a patient with specific characteristics such as age and gender exhibits a specific medical condition and is prescribed certain medications. All these attributes may be spread across perhaps 10 different tables, each with an average of 15 attributes. Many of these attributes may have no relevance to each other, while others have precise meanings and dependencies on each other. When the AI model generates data that deviates from what is present in production, it becomes very difficult to determine the cause. The model then needs to be manually adjusted to prevent such situations.

Quality vs Safety

The greater the discrepancy between what actually occurs in production systems and the test data used for system testing, the greater the risk of overlooking certain errors during the testing process. For instance, if you use AI to generate all test data, the risk to quality increases significantly: data that looks like production data is not necessarily equivalent to it. Generative approaches are highly suitable for generating images or texts. However, when the technique is applied to data that must closely match production, the likelihood of errors increases significantly.
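One way to make the discrepancy between production and generated data measurable is to compare how often each value occurs in both sets. The sketch below uses the total variation distance on a single categorical column. The column values are made up, and the metric is our choice for illustration rather than a prescribed method.

```python
from collections import Counter

def category_shares(values):
    """Return the share of each distinct value in the list."""
    counts = Counter(values)
    total = len(values)
    return {value: count / total for value, count in counts.items()}

def total_variation_distance(prod_values, synth_values):
    """0.0 means identical distributions, 1.0 means no overlap at all."""
    p = category_shares(prod_values)
    q = category_shares(synth_values)
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

# Hypothetical country codes from a production table and a generated table.
production = ["NL", "NL", "NL", "DE", "BE"]
synthetic = ["NL", "DE", "DE", "DE", "FR"]

distance = total_variation_distance(production, synthetic)
```

A check like this only covers one attribute at a time. It says nothing about relationships between attributes, which is where generated data tends to drift from production unnoticed.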

Overfitting/Underfitting

During the training of an AI model, overfitting or underfitting can occur. Underfitting can result in data that is entirely unrealistic, such as birthdates in the future, while overfitting can reproduce production data too precisely, potentially making the data identifiable again. These issues can be avoided, but doing so requires manual fine-tuning of the model, simplifying the data, or adding more data.
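Both symptoms can be checked for after generation. The following sketch, with invented rows and a fixed reference date so the result is reproducible, flags future birthdates and generated rows that are exact copies of production rows. The row layout is an assumption, and exact matching is only the simplest possible re-identification check.

```python
from datetime import date

def future_birthdates(rows, today):
    """Rows with a birthdate after the reference date are unrealistic."""
    return [row for row in rows if row["birthdate"] > today]

def exact_copies(synthetic_rows, production_rows):
    """Generated rows identical to a production row may be re-identifiable."""
    production_set = {tuple(sorted(row.items())) for row in production_rows}
    return [
        row for row in synthetic_rows
        if tuple(sorted(row.items())) in production_set
    ]

# Hypothetical data for illustration only.
production_rows = [
    {"name": "A. Jansen", "birthdate": date(1984, 5, 17)},
    {"name": "B. de Vries", "birthdate": date(1990, 11, 2)},
]
synthetic_rows = [
    {"name": "C. Bakker", "birthdate": date(2090, 1, 1)},   # unrealistic value
    {"name": "A. Jansen", "birthdate": date(1984, 5, 17)},  # copy of production
    {"name": "D. Visser", "birthdate": date(1975, 3, 9)},
]

today = date(2024, 1, 1)  # fixed reference date for the example
unrealistic = future_birthdates(synthetic_rows, today)
leaked = exact_copies(synthetic_rows, production_rows)
```

Checks like these detect the problem but do not fix it. Correcting the model still requires the manual tuning described above.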

New functionalities

New functionalities often require new test data that is not available in the production data. The AI model, therefore, lacks model input on which to base this new data. Hence, it is necessary to provide the ability to configure manual generators alongside a model-based generator to meet this need for test data. Additionally, specific edge cases that are not present in the training data but are essential for testing should be generated in a different way.
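As a rough sketch of what combining a model-based generator with manual generators could look like: the `model_based_row` function below is only a stand-in for a trained model, and the `loyalty_tier` column and the edge cases are hypothetical.

```python
import random

def model_based_row(rng):
    """Stand-in for a trained model: picks from values seen in production."""
    return {"country": rng.choice(["NL", "DE", "BE"]), "loyalty_tier": None}

# Manual generators for attributes the model has never seen (hypothetical new column).
manual_generators = {
    "loyalty_tier": lambda rng: rng.choice(["bronze", "silver", "gold"]),
}

# Hand-written edge cases that must always be part of the test set.
edge_cases = [
    {"country": "NL", "loyalty_tier": "gold"},
    {"country": "", "loyalty_tier": "bronze"},  # empty country to test validation
]

def generate(n, seed=0):
    """Generate n model-based rows, fill new columns manually, append edge cases."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        row = model_based_row(rng)
        for column, generator in manual_generators.items():
            row[column] = generator(rng)
        rows.append(row)
    return rows + edge_cases

rows = generate(3)
```

The model covers what production already contains, the manual generators cover what is new, and the edge cases are added explicitly rather than left to chance.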

Legal and Regulatory Considerations

Generating synthetic data based on AI requires model input, often using existing production data. For processing personal data, as defined by GDPR (General Data Protection Regulation), a lawful basis is required. The regulations surrounding AI and the use of existing personal data are becoming stricter. There may be precedents where using existing personal data to train an AI model is not allowed because there is no valid basis for it.

A significant trend in AI right now is the use of synthetic data as training data. However, in this case, it would be nonsensical as we specifically want to generate synthetic test data.

Security

For many AI-based solutions for synthetic test data, it is necessary to extract production data with sensitive personal information so that it can be processed as a training set by the AI model on another system. This introduces additional risks since the data is processed elsewhere, and this new infrastructure must have at least the same security measures as the production databases. Additionally, it is crucial that the software used to read the production data and train the AI model does not contain vulnerabilities that could be exploited by hackers or malicious actors.

Hours and Costs for Model Training

When deploying AI to learn and develop a model for large, complex databases with many tables and large volumes of data, it is essential to invest in powerful hardware. Training a model on a database with terabytes of data and a complex data model can take a significant amount of time. Furthermore, analyzing the results and any necessary fine-tuning of the model can also be time-consuming.

Moreover, when new production scenarios arise, one must consider whether the model needs to be completely retrained or if new cases can be incrementally added. However, the latter approach is not always possible. Additionally, sometimes it is not feasible to replicate production incidents because the model cannot generate individual cases with specific characteristics.

Consistency Across Multiple Tables and Databases

Many AI-based test data generation tools have limitations when it comes to generating consistent datasets for larger data models with many tables and complex relationships, especially when they span multiple databases or applications. Furthermore, relationships consisting of multiple attributes are often not well supported. In cases where test data needs to be generated for a comprehensive and coherent data system, an AI-based solution is not always the most suitable choice.
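Whether a generated dataset is consistent across tables can at least be verified afterwards. The sketch below looks for child rows whose key, here a relationship consisting of two attributes, has no matching parent row. The table and column names are hypothetical.

```python
def orphaned_rows(child_rows, parent_rows, child_key, parent_key):
    """Return child rows whose (possibly multi-column) key has no matching parent."""
    parent_keys = {tuple(parent[col] for col in parent_key) for parent in parent_rows}
    return [
        child for child in child_rows
        if tuple(child[col] for col in child_key) not in parent_keys
    ]

# Hypothetical generated tables, identified by (hospital_id, patient_id).
patients = [
    {"hospital_id": 1, "patient_id": 100},
    {"hospital_id": 2, "patient_id": 100},
]
prescriptions = [
    {"hospital_id": 1, "patient_id": 100, "drug": "metformin"},
    {"hospital_id": 3, "patient_id": 100, "drug": "ibuprofen"},  # no such patient
]

orphans = orphaned_rows(
    prescriptions, patients,
    child_key=("hospital_id", "patient_id"),
    parent_key=("hospital_id", "patient_id"),
)
```

In this example the second prescription is reported as an orphan. The same check becomes harder to run when parent and child live in different databases or applications, which is exactly the situation described above.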

Placing Test Data in the Right Location

Another problem is that data generated outside the actual system under test often comes without a direct means of loading it into the test database. Test databases typically contain complex logic, such as database constraints, indexes, and triggers, which can make bulk data loading problematic. The AI solution usually only provides CSV files or can, in some cases, write data to tables without considering existing constraints, indexes, and triggers. These limitations often need to be circumvented manually or through scripts, which is not always straightforward.
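A loading script of the kind mentioned above might look like the following sketch. It uses an in-memory SQLite database as a stand-in for the test database, with a hypothetical two-table schema. Parent rows are loaded before child rows, constraints stay enabled, and the whole load is rolled back if any row violates them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys by default
conn.execute(
    "CREATE TABLE patient ("
    "id INTEGER PRIMARY KEY, "
    "birth_year INTEGER NOT NULL CHECK (birth_year <= 2024))"
)
conn.execute(
    "CREATE TABLE prescription ("
    "id INTEGER PRIMARY KEY, "
    "patient_id INTEGER NOT NULL REFERENCES patient(id), "
    "drug TEXT NOT NULL)"
)

def load(conn, patients, prescriptions):
    """Load parents before children; roll everything back on a constraint violation."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.executemany("INSERT INTO patient VALUES (?, ?)", patients)
            conn.executemany("INSERT INTO prescription VALUES (?, ?, ?)", prescriptions)
        return True
    except sqlite3.IntegrityError:
        return False

# Hypothetical generated rows.
loaded = load(conn, [(1, 1980), (2, 2015)], [(10, 1, "metformin"), (11, 2, "amoxicillin")])

# This batch references a patient that does not exist, so nothing from it is kept.
rejected = load(conn, [(3, 1990)], [(12, 99, "ibuprofen")])

patient_count = conn.execute("SELECT COUNT(*) FROM patient").fetchone()[0]
```

Production test databases add triggers, indexes, and many more tables, so the load order and error handling become considerably more involved than in this small example.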

Conclusion

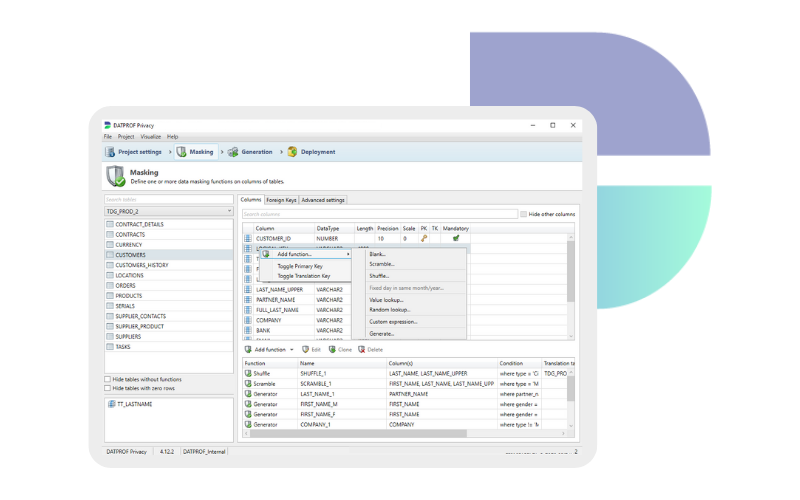

Does this mean there is no application for AI in test data generation? Of course not! It will ultimately become a valuable tool alongside methods such as masking, subsetting, generating, and virtualizing, specifically for certain challenges. It is not a magic bullet that solves all problems miraculously.

An example of an application could be where AI assists testers and developers in understanding the relationships between data. Another is generating data with complex relationships within a limited structure of one or two tables. AI can also be useful for generating large amounts of data based on limited training data, such as for performance testing. With the rise of large language models, you could use such a model to generate data based on natural language or even a SQL query. This allows generation without training on production data.

AI is here to stay, but like many new technologies, it is not a universal solution for all challenges. It is a tool that needs to be used thoughtfully and strategically within the right context.
