The test data bottleneck

Flexible and fast software development is a competitive advantage. If your organization, or the organization you work for, can release software faster than another organization in the same market, you’re king! Right?

All these efforts in Agile, Scrum, DevOps and Artificial Intelligence are focused on gaining a competitive advantage. We start with virtualization or test automation. We use low-code software development systems to adapt quickly to new situations. Organizational changes are implemented, like agile software development, and the agile ideology has changed organizations. Nowadays everybody is working in teams or tribes. These teams are sometimes even self-regulated. But there is a major problem we often don’t think about: the test data bottleneck.

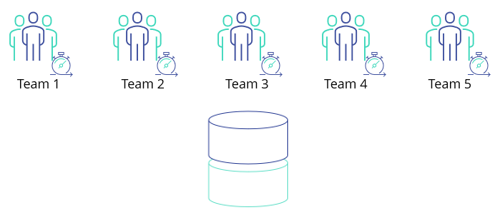

Teams are multidisciplinary: software developers, operations, software quality engineers, test automation engineers. And you probably have several of these teams. 10-30 teams? Sometimes fewer, sometimes more. One thing that’s certain is that there are a lot of teams, and all of them are developing, testing and producing software code. Typically there is only one test database for every 5-10 teams. And then you’re lucky.

You are losing valuable time, because:

1. You are all working in the same environment on the same database

2. Searching for test cases in a 100% copy of production is difficult

3. Your test data isn’t managed

Survival of the fittest

Multiple teams are working on a limited number of databases. And that makes sense, because unfortunately many core systems are built on DB2 or Oracle databases or, if you’re a bit lucky, on MS SQL Server databases. Maybe you’re using PostgreSQL or MySQL, but these are still up and coming. The commercial database systems aren’t free, so creating new large databases is expensive! Large is important here, because in many cases vendors don’t really charge you based on the number of databases; they charge you based on size. The number of CPU cores is one basis for the invoice, the size in terabytes is another. So size really does matter.

Because of the size, the number of databases is limited. So you’re working with multiple teams in one database. In many organizations, this means ‘survival of the fittest’. May the best team win. If your team finds the right test data first, your team can start developing and testing with the right test data first. Or we see that databases are scheduled: team 1 may test during period x, team 2 afterwards and team 3 after team 2. That’s not agile; that’s almost prehistoric, and it creates a serious bottleneck.

Searching for test cases

Searching for test cases in a 100% copy of production is terrible. As a team you want the right test case coverage. If you have the right test case coverage, you’ll understand the impact before going to production. But getting the right test case coverage is challenging. If you have a system that is already several years old, then this can become difficult, because you have a lot of history or migrated data. Finding the right test cases for today or tomorrow is probably easy, but what about the past? A customer from a couple of years ago should get the same service as your new ones. So we need to make sure that these test cases are also covered. And this is already getting difficult, especially if you try to find test cases in tens of terabytes.

We encounter databases with 30 terabytes of data, sometimes even 100 terabytes. Nowadays there is an enormous appetite for storing data. So finding the right test cases in that many terabytes… Well, that is a challenge, to say the least. No wonder research shows that almost 50% of testing time is lost searching for the right test data. And when (or if) you find it, a colleague of yours may just have changed the test case, because you’re working on the same database.

Manage your test data

It sounds obvious, but if your test data isn’t managed, you are not in control of it. If a test shows bad results, you don’t know whether it was the code or the data: you have two unknowns. Our experience shows that organizations finally get in control of their test data when they start working with test data management. More control means more certainty: if you find a software fault, you can be more sure that it’s a fault in the code. With that insight you’re able to give better feedback, which means better code, which means faster deployment (or are we getting ahead of ourselves now?).

Solving the bottleneck with synthetic test data?

A possible solution is to start generating synthetic test data. Generating synthetic test data will help you solve the test data bottleneck. Results will turn green and your test tools will show that you can move to staging or even to production! But afterwards the trouble starts, because you’ll encounter software defects that should have been found earlier: your production data is more complex than your test data generator assumed…

Synthetic test data is beautiful and it will help you with the first few tests, like unit tests. But for more durable tests and more definitive answers, synthetic test data is problematic. Generating test data for a simple test case that can be entered through the front end of a system is okay! But in the back end there are a lot more test cases. A test that adds a new customer is fine, but editing a customer is already more difficult, let alone changing a product. You would have to generate synthetic test data for all tables. But how many tables does your system have? 100 if you’re lucky. Systems with 2,000 up to 10,000 tables aren’t uncommon. And since each table has multiple columns, you may have a bit of a challenge. And so far we have only discussed one system. What about all the other systems you probably have…

If you want to solve the test data management bottleneck, you’ll have to use a combination of multiple techniques. You’ll have to use:

- Subsetting technology for creating smaller sized environments;

- Masking technologies to become compliant;

- Synthetic test data to create test cases that don’t exist yet.

Subsetting your test data

Subsetting has many benefits and only a small number of drawbacks. The benefits of subsets for the software quality process are:

- Creating new database environments at low cost;

- Creating new environments with subsets of test data in minutes;

- Having influence over the test data in your test environment;

- Having representative test data cases in your environment;

- Reducing data leakage problems;

- Increasing the speed of test automation;

- Less downtime while waiting for a test database.

We see it happen all the time. Software development teams depend on procedures and database administrators before a new test database is deployed for testing and developing, if a refresh is even a possibility. In many cases refreshing a database takes up to 3.5 people and almost a work week before it is deployed. So a lot of time is wasted. The delay before a database is refreshed is partly due to size: copying a large database simply takes time. But it also takes time because you need clearance to copy a database for testing purposes. A DBA has to make time to create the copy, but it is not their core business, so they don’t prioritize it according to your needs. And so the waiting game starts.

Representative test data

We’ve seen teams working with a 10-year-old test database. 10 years. That’s no joke. You might be thinking: is that a problem? Well, maybe not. But we are in the business of creating software of the highest quality possible. We want to make sure that software is at the right level to perform in production. Is a 10-year-old test database representative of production? Or a 5-year-old one? Or a 1-year-old one? It would be helpful if a new test database were created with every release to production, so ideally every day if you go to production every day. In short, it should follow your release calendar. But even if we do so, refreshing a large database takes a lot of time…

Restoring 10 TB takes time; the process itself can take up to 12 hours. So in the end, even if we eliminate all procedural overhead, we are still left with a process that can take up to 12 hours. How can we improve these numbers? Subsetting is a very interesting next step. It can reduce a database to as little as one per mille (0.1%) of its original size. So a 10 terabyte production database becomes 10 gigabytes. How about that? How fast can you restore a 10 gigabyte database? This has an enormous impact. By reducing storage with subsetting, you are now able to back up and restore databases within minutes.
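To put rough numbers on this, here is a back-of-the-envelope sketch. It assumes that restore time scales linearly with database size, which is a simplification: real restores have fixed overhead, so the actual figure for a small database will be higher than the estimate. The sizes and the 12 hours come from the text above; the function names are only illustrative.

```python
# Back-of-the-envelope restore estimate.
# Assumption: restore time is proportional to database size (no fixed overhead).

TB = 1000 ** 4  # bytes in a terabyte (decimal units)
GB = 1000 ** 3  # bytes in a gigabyte (decimal units)


def restore_throughput(size_bytes: int, hours: float) -> float:
    """Average bytes per second implied by restoring size_bytes in the given hours."""
    return size_bytes / (hours * 3600)


def restore_seconds(size_bytes: int, throughput: float) -> float:
    """Estimated restore time in seconds at the given throughput."""
    return size_bytes / throughput


full_size = 10 * TB              # production database from the text
subset_size = full_size // 1000  # one per mille (0.1%) of production

throughput = restore_throughput(full_size, hours=12)
subset_secs = restore_seconds(subset_size, throughput)

print(f"Implied throughput: {throughput / 1e6:.0f} MB/s")
print(f"Subset size: {subset_size / GB:.0f} GB")
print(f"Estimated subset restore: {subset_secs:.1f} seconds")
```

Even with generous allowance for fixed overhead on top of this estimate, the restore moves from hours to minutes.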

The last part of the bottleneck

To be completely honest, there is one problem left. Is a subset 100% perfect from the start? The answer is no. Even with our solution, the first subset will not be 100% perfect or fully representative of production. But we are able to give you control. If analysis points to a data problem, you’re now able to adapt easily: you can add new test data cases to your initial subset. So with every new subset run, the test data that was missing in the previous run is now available in your subset. With every run you gain more confidence that you’re able to go to production.
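The idea of growing a subset run by run can be sketched as follows. This is a minimal illustration using Python's built-in `sqlite3` module, not a description of how any particular subsetting tool works. The `customer` and `orders` tables, their columns and the sample rows are all hypothetical. The point is that a subset is driven by a list of wanted cases, and that child rows are copied along with their parents so the subset stays consistent.

```python
import sqlite3


def build_source() -> sqlite3.Connection:
    """Create a tiny stand-in for a production database (hypothetical schema)."""
    src = sqlite3.connect(":memory:")
    src.executescript("""
        CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, since INTEGER);
        CREATE TABLE orders (id INTEGER PRIMARY KEY,
                             customer_id INTEGER REFERENCES customer(id),
                             product TEXT);
    """)
    src.executemany("INSERT INTO customer VALUES (?, ?, ?)",
                    [(1, "A", 2009), (2, "B", 2015), (3, "C", 2021), (4, "D", 2023)])
    src.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                    [(10, 1, "legacy plan"), (11, 2, "basic"), (12, 3, "basic"),
                     (13, 4, "premium"), (14, 4, "basic")])
    return src


def subset(src: sqlite3.Connection, customer_ids: set) -> sqlite3.Connection:
    """Copy the chosen customers and all of their orders into a new database."""
    dst = sqlite3.connect(":memory:")
    # Recreate the schema in the target database.
    for (ddl,) in src.execute("SELECT sql FROM sqlite_master WHERE type = 'table'"):
        dst.execute(ddl)
    ids = sorted(customer_ids)
    marks = ",".join("?" * len(ids))  # one placeholder per id
    customers = src.execute(
        f"SELECT * FROM customer WHERE id IN ({marks})", ids).fetchall()
    orders = src.execute(
        f"SELECT * FROM orders WHERE customer_id IN ({marks})", ids).fetchall()
    dst.executemany("INSERT INTO customer VALUES (?, ?, ?)", customers)
    dst.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
    dst.commit()
    return dst


def count(db: sqlite3.Connection, table: str) -> int:
    return db.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


source = build_source()
wanted = {4}                 # first run: only a recent customer
first_run = subset(source, wanted)
wanted.add(1)                # analysis showed a legacy customer was missing
second_run = subset(source, wanted)
print(count(first_run, "customer"), count(first_run, "orders"))
print(count(second_run, "customer"), count(second_run, "orders"))
```

The list of wanted cases is the part a team keeps and extends; the subset itself is disposable and can be rebuilt on every run.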

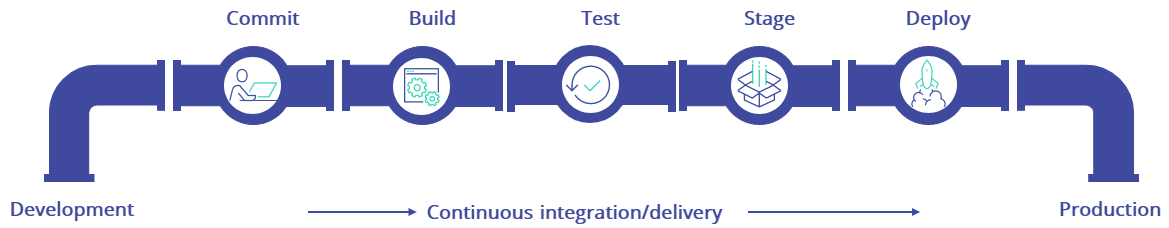

Masking your test data

In your search for the best test data in a DevOps world, you end up at data masking, because using a subset means using production data. After all, using production data is the most representative way of making sure that the developed software is of the right quality. But we can’t simply use production data anymore. Due to GDPR and other regulations, we’re not allowed to use production data with privacy-sensitive information. So you mask it. The order in which you execute these steps is also important. In many cases we advise starting with masking and then subsetting from the masked database. The big advantage is that all your subsetted databases already contain masked data.

Masking test data can be challenging. The real challenge is keeping the test data as representative as possible while making sure that nobody is able to trace it back to the real individual. So adding the right masking rules to your data is crucial.
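As an illustration of what a masking rule can look like, here is a minimal sketch of one common technique: deterministic replacement using a keyed hash (HMAC). It is an example under stated assumptions, not the rule set of any specific product. The replacement name list and the key are hypothetical. The same real name always maps to the same replacement, so joins between tables keep working, and without the key the mapping cannot be recomputed.

```python
import hashlib
import hmac

# Hypothetical replacement values; a real rule set would use a much larger list
# so that the masked data keeps a realistic distribution of names.
FAKE_LAST_NAMES = ["Jansen", "de Vries", "Bakker", "Visser", "Smit", "Meijer"]


def mask_last_name(real_name: str, key: bytes) -> str:
    """Replace a real last name with a fake one, consistently for the same input."""
    normalized = real_name.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).digest()
    # Use the first 8 bytes of the keyed hash to pick a replacement.
    index = int.from_bytes(digest[:8], "big") % len(FAKE_LAST_NAMES)
    return FAKE_LAST_NAMES[index]


# Hypothetical key for the example; in practice it must be kept secret
# and out of source control.
MASKING_KEY = b"example-key-do-not-use"

print(mask_last_name("Mulder", MASKING_KEY))
print(mask_last_name(" mulder ", MASKING_KEY))  # same person, same replacement
```

With a short replacement list, many real names share one fake name. That helps anonymity but hurts representativeness, which is exactly the trade-off a masking rule has to balance.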

It’s a DevOps world

The last step in solving the test data bottleneck is the use of synthetic test data. It is the last important step towards a DevOps world, because with synthetic test data your software development team is able to align its test data precisely with its test cases. New products can be generated, new unusual last names can be created, and new IBAN bank account numbers can be generated.
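Generating IBANs is a good example of why synthetic data needs some care: a random string will be rejected by any system that validates the check digits. The sketch below computes the standard IBAN check digits (the mod 97 scheme) for a Dutch-format IBAN. The bank code `TEST` is a made-up placeholder, and national account-number rules beyond the IBAN checksum are not modeled here.

```python
import random
import string


def _to_digits(text: str) -> str:
    """Convert letters to numbers (A=10 ... Z=35); digits stay as they are."""
    return "".join(str(int(ch, 36)) for ch in text)


def iban_check_digits(country: str, bban: str) -> str:
    """Compute the two IBAN check digits for a country code and BBAN."""
    remainder = int(_to_digits(bban + country + "00")) % 97
    return f"{98 - remainder:02d}"


def is_valid_iban(iban: str) -> bool:
    """Validate the checksum: move the first four characters to the end, mod 97 must be 1."""
    rearranged = iban[4:] + iban[:4]
    return int(_to_digits(rearranged)) % 97 == 1


def synthetic_nl_iban(rng: random.Random, bank_code: str = "TEST") -> str:
    """Generate a Dutch-format IBAN (NL + 2 check digits + 4 letters + 10 digits).

    bank_code is a hypothetical placeholder, not a real bank identifier.
    """
    account = "".join(rng.choice(string.digits) for _ in range(10))
    bban = bank_code + account
    return "NL" + iban_check_digits("NL", bban) + bban


rng = random.Random(42)  # seeded so a test run is repeatable
for _ in range(3):
    iban = synthetic_nl_iban(rng)
    print(iban, is_valid_iban(iban))
```

Seeding the generator makes the synthetic data reproducible, so a failing test can be rerun against exactly the same account numbers.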

Now you’re almost there. 1) You are able to deploy test data in minutes with subsets, 2) you are able to mask test data to become compliant and 3) you are able to synthetically generate test data that aligns with your test cases. The last step is to make sure you’re in control of the deployment moment. You’re no longer dependent on 3.5 people; you’re able to automate all these steps. Your team is finally in control.
