Data analysis in software testing

Test data plays a major role in software development. When you test your applications, you need data. This data needs to meet certain requirements to ensure that your tests are reliable and successful and that your software is of the highest possible quality. But how do you compile this test data? It all starts with data analysis or data discovery. This article explains what these terms mean and why they matter.

Test data analysis vs. test data discovery

Data analysis is a very broad term. According to Wikipedia, it is “a process of inspecting, cleansing, transforming and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making.” Data analysis is used in many different fields.

Data discovery can be explained as “The search for specific items or patterns in a dataset.” Because this term is somewhat more specific, we use it more often in the Test Data Management (TDM) area. Data discovery is a fundamental part of establishing the right TDM strategy.

The purpose of test data discovery

Only when you know what’s in your database can you come up with the right strategy for your Test Data Management. Data discovery helps determine where Personally Identifiable Information (PII) is located in your database. It also helps you discover any data anomalies or pollution within your database. With this knowledge you can make the right choices in 1) your data anonymization strategy and 2) your data provisioning strategy.

1. Data discovery for your data anonymization strategy

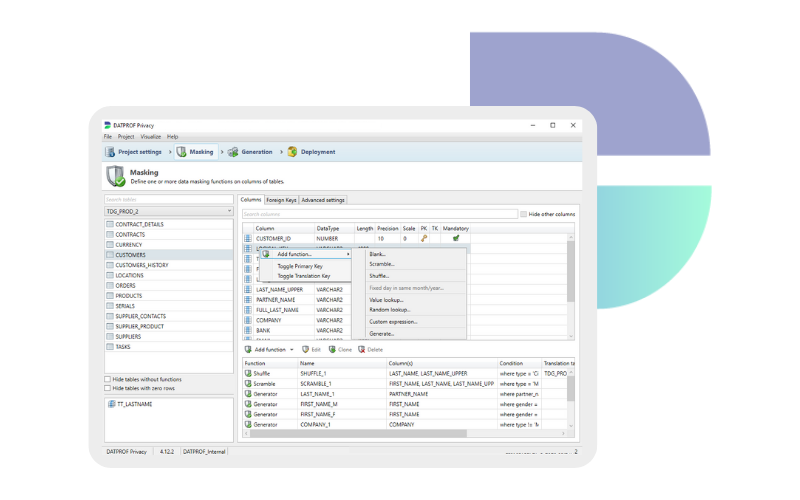

Is there any data that needs to be masked? Or do you need to generate synthetic test data to cover missing test cases? Data analysis or data discovery helps you answer these questions. Profile your databases to discover which privacy-sensitive information is stored and where.

2. Data discovery for your data provisioning strategy

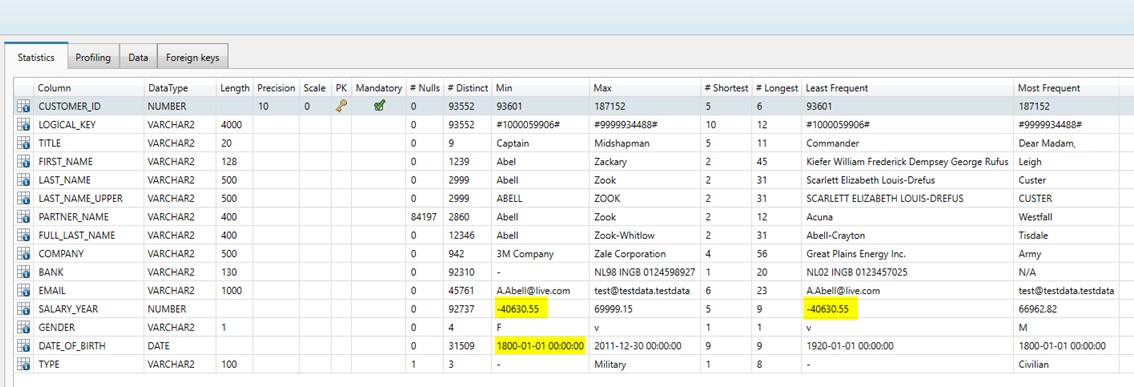

For a proper data provisioning strategy, the first step is to know your data. Use a data discovery tool to generate full data statistics and discover data anomalies (e.g. extremely long records, missing records or dates of birth that are in the future). Visualize data dependencies to understand how data is interconnected. This helps you select the cases you need for your tests. Especially when you’re using subsets of data, it’s important to keep those anomalies or pollution in your test datasets. This ensures that the test dataset is representative and applicable for your specific test purposes.
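To make the kinds of anomalies mentioned above concrete, here is a minimal Python sketch that flags them in a handful of rows. The table layout, the column names, the `find_anomalies` helper and the length threshold are all hypothetical and chosen purely for illustration; a real discovery tool would run comparable checks against your actual database.

```python
from datetime import date

# Hypothetical sample rows; the column names are illustrative only.
customers = [
    {"id": 1, "name": "Anna Jansen", "date_of_birth": date(1985, 4, 12)},
    {"id": 2, "name": "X" * 300, "date_of_birth": date(1990, 1, 1)},
    {"id": 3, "name": None, "date_of_birth": date(2999, 1, 1)},
]


def find_anomalies(rows, max_name_length=100, today=None):
    """Return (row id, description) pairs for rows that look anomalous."""
    today = today or date.today()
    anomalies = []
    for row in rows:
        name = row["name"]
        if name is None:
            anomalies.append((row["id"], "missing name"))
        elif len(name) > max_name_length:  # assumed threshold
            anomalies.append((row["id"], "extremely long name"))
        dob = row["date_of_birth"]
        if dob is not None and dob > today:
            anomalies.append((row["id"], "date of birth in the future"))
    return anomalies


# A fixed reference date keeps the example reproducible.
anomalies = find_anomalies(customers, today=date(2024, 1, 1))
for row_id, problem in anomalies:
    print(row_id, problem)
```

Once the anomalous rows are known, you can deliberately include them when you build a subset, so the smaller dataset stays representative.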

How to perform data discovery

It’s important to gather a broad-ranging data analysis when designing your test data provisioning and masking strategies. As an example:

The example analysis highlights data anomalies and shows useful statistics such as null percentages, distinct value counts and typical data bounds.

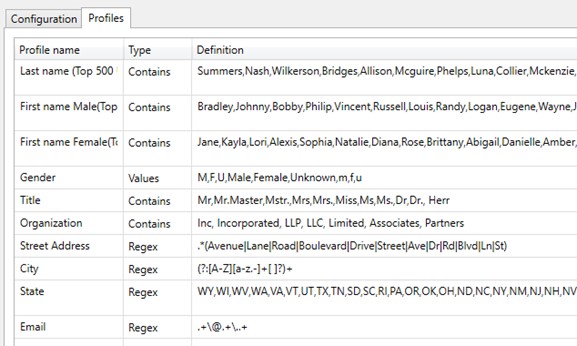

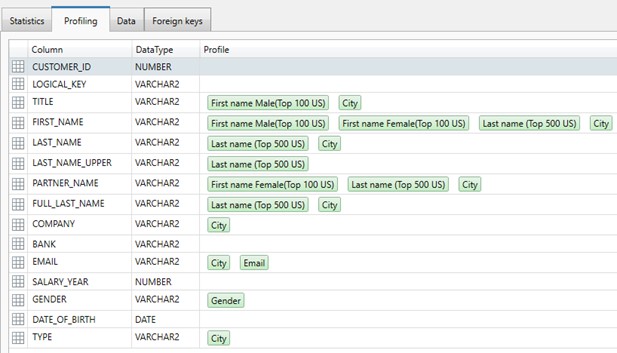
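As a rough illustration of the statistics such an analysis reports, the sketch below computes a null percentage, a distinct value count and the data bounds for a single column. The `profile_column` function and the sample values are assumptions made for this example and do not reflect how any particular tool works internally.

```python
def profile_column(values):
    """Compute basic profiling statistics for one column of values."""
    non_null = [v for v in values if v is not None]
    total = len(values)
    return {
        # Share of rows without a value, as a percentage.
        "null_pct": 100.0 * (total - len(non_null)) / total if total else 0.0,
        # Number of different non-null values.
        "distinct": len(set(non_null)),
        # Data bounds; an unexpected bound often points at an anomaly.
        "min": min(non_null) if non_null else None,
        "max": max(non_null) if non_null else None,
    }


# Hypothetical "age" column containing two nulls and one suspicious value.
ages = [34, 51, None, 34, 27, None, 212, 45]
stats = profile_column(ages)
print(stats)
```

Here the upper bound of 212 stands out as an implausible age, which is exactly the kind of hint that data bounds are meant to give.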

This information can then be profiled for PII content using your own, or presupplied, selection criteria:

Using such selection criteria, the results of the analysis can be profiled to give a good indication of where there’s a match:

Note: In the example above, the City evaluation uses the regular expression ‘(?:[A-Z][a-z.-]+[ ]?)+’ (basically a text string lookup), which produces a positive result in many columns. Some would argue that these are false positives, given the context (column) in which the match is found. The vast majority of the columns test correctly, but there is never a guarantee that false positives or negatives will not occur. The real point is that these results give you proximity and a powerful hint that the table needs further investigation. It is this due diligence that most data protection legislative frameworks refer to in their supporting recitals.
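The false-positive behaviour described in the note can be reproduced with a short Python sketch. It applies the City regular expression from the note to a few hypothetical columns and reports the share of values that match. The column names, the sample values, the `match_ratio` helper and the choice to require a match on the whole value are all assumptions made for this illustration.

```python
import re

# The City pattern quoted in the note above.
CITY_PATTERN = re.compile(r"(?:[A-Z][a-z.-]+[ ]?)+")


def match_ratio(values, pattern=CITY_PATTERN):
    """Return the fraction of non-empty values that fully match the pattern."""
    non_empty = [v for v in values if v]
    if not non_empty:
        return 0.0
    hits = sum(1 for v in non_empty if pattern.fullmatch(v))
    return hits / len(non_empty)


# Hypothetical columns; names and values are illustrative only.
columns = {
    "city": ["Amsterdam", "New York", "Den Haag"],
    "last_name": ["Jansen", "Smith", "Bakker"],
    "postcode": ["1012 AB", "10001", "2511 CV"],
}

ratios = {name: match_ratio(values) for name, values in columns.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.0%} of values match the City pattern")
```

In this sample the `last_name` column matches just as strongly as `city`, which is a false positive for the City criterion but still a useful hint that the column holds personal data and deserves a closer look.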

Test data discovery tools

There are many data discovery or test data analysis tools, such as PCI data discovery tools or Oracle’s Sensitive Data Discovery. Every TDM analysis tool has its own pros and cons and suits certain purposes. Our data discovery tool ‘DATPROF Analyze’ is a widely applicable tool with which you can build and analyze full data statistics. It can profile the data to find privacy-sensitive data, visualize data dependencies, and export and distribute a findings report. In short: it helps you put together a high-quality test dataset for your testing and development purposes.
