Data insight

Inspect privacy sensitivity, size & quality

How valuable would it be to have insight into your organization’s data sources? Would you treat a source differently if you only needed 10% of its data? Does your source contain privacy-sensitive data, and if so, where is it stored?

Time to get insight into your data!

Do you know your data?

Data landscapes typically consist of various data sources, such as CRM, ERP, or HRM systems. Most organizations maintain multiple systems, often with multiple copies of each. Do you know how privacy-sensitive the different sources in your environment are? Some probably contain more privacy-sensitive information than others. If you do not have a clear picture, it is essential to inspect these sources and determine whether they contain privacy-sensitive information and where it is stored.

DTAP environments

Software teams use DTAP (Development, Testing, Acceptance, Production) environments for development and testing purposes. In these environments, multiple copies of various data sources exist. Therefore, it’s not just the production data that needs attention but also all its copies, sometimes as many as fifteen copies of a single system.

For example, if you examine a CRM or ERP system, you’ll find numerous copies for testing and acceptance purposes, sometimes multiple copies of each system.

Explore the source

Occasionally we see a source that is not privacy-sensitive, is stable in size (not growing), and exists as just a single copy. That is straightforward, and often no action is needed. More often, though, sources need to be explored. We explore a source by generating statistics about it and then profiling its data. Data profiling gives you an understanding of how sensitive the different tables and the information in them are.
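As a rough illustration of what profiling for sensitivity can look like, the sketch below scans every column of a few sample rows and reports which share of its values looks like an e-mail address or a phone number. The patterns, the `sensitivity_profile` function and the sample rows are assumptions made for this example; real sources need patterns tuned to their own formats, and a real profiling tool covers far more data types.

```python
import re

# Hypothetical patterns for illustration only; tune them to your own data.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def sensitivity_profile(rows):
    """Return, per column, the fraction of non-empty values matching each pattern."""
    hits = {}
    counts = {}
    for row in rows:
        for column, value in row.items():
            if value in (None, ""):
                continue
            counts[column] = counts.get(column, 0) + 1
            for label, pattern in PATTERNS.items():
                if pattern.search(str(value)):
                    column_hits = hits.setdefault(column, {})
                    column_hits[label] = column_hits.get(label, 0) + 1
    # Only columns with at least one match appear in the result.
    return {
        column: {label: n / counts[column] for label, n in labels.items()}
        for column, labels in hits.items()
    }

sample_rows = [
    {"id": 1, "contact": "anna@example.com", "note": "call back on +31 20 123 4567"},
    {"id": 2, "contact": "bob@example.com", "note": "no remarks"},
]
profile = sensitivity_profile(sample_rows)
```

Columns that show up in `profile` are candidates for a closer look; a free-text column such as `note` appearing there is exactly the kind of finding that is easy to miss without profiling.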

When we generate statistics, we look for striking, unusual data in a source. This can be anything from remarkably long names to special characters or negative salaries. It is always interesting to see how data sources are filled with different types of data. If you explore your sources, you’ll probably discover anomalies you would never have thought of yourself, such as names (privacy-sensitive data) in comment fields.
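The kinds of anomalies mentioned above can be counted with a few simple checks. The following is a minimal sketch, assuming rows are available as dictionaries; the `column_anomalies` function, the column names and the length threshold of 40 are hypothetical choices for this example.

```python
import re

def column_anomalies(rows, name_column, salary_column, max_name_length=40):
    """Count striking values: very long names, special characters, negative salaries."""
    # Anything other than letters, digits, underscore, whitespace, ' . and - counts as special.
    special = re.compile(r"[^\w\s'.-]")
    stats = {"long_names": 0, "special_characters": 0, "negative_salaries": 0}
    for row in rows:
        name = row.get(name_column) or ""
        salary = row.get(salary_column)
        if len(name) > max_name_length:
            stats["long_names"] += 1
        if special.search(name):
            stats["special_characters"] += 1
        if salary is not None and salary < 0:
            stats["negative_salaries"] += 1
    return stats

employees = [
    {"name": "Jan de Vries", "salary": 3200},
    {"name": "X" * 60, "salary": 2800},
    {"name": "Test#User", "salary": -100},
]
stats = column_anomalies(employees, "name", "salary")
```

Each counter in `stats` points at rows worth inspecting by hand before deciding on masking or cleaning rules.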

Get in control

Data keeps changing. That means you need some kind of reporting in place: a timestamped record of the state of a data source at a certain point in time. The availability of statistics and data profiling gives you control over the source. Being in control enables you to develop a strategy on how to handle the source.
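One simple form of such reporting is a timestamped snapshot of row counts per table, which can be compared over time to see whether a source is growing. The sketch below uses an in-memory SQLite database purely as a stand-in for a real source; the `snapshot` and `growth` helpers and the `customer` table are assumptions made for this example.

```python
import sqlite3
from datetime import datetime, timezone

def snapshot(connection):
    """Record the row count of every table together with a UTC timestamp."""
    tables = [
        name for (name,) in connection.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    counts = {}
    for table in tables:
        # Table names come from the database catalog itself, so quoting them suffices here.
        (count,) = connection.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()
        counts[table] = count
    return {"taken_at": datetime.now(timezone.utc).isoformat(), "row_counts": counts}

def growth(earlier, later):
    """Row-count difference per table between two snapshots."""
    return {
        table: later["row_counts"][table] - earlier["row_counts"].get(table, 0)
        for table in later["row_counts"]
    }

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customer (name) VALUES ('A')")
first = snapshot(conn)
conn.execute("INSERT INTO customer (name) VALUES ('B'), ('C')")
second = snapshot(conn)
delta = growth(first, second)
```

Storing such snapshots for every copy of a source makes it possible to answer later whether a source is stable in size or still growing.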

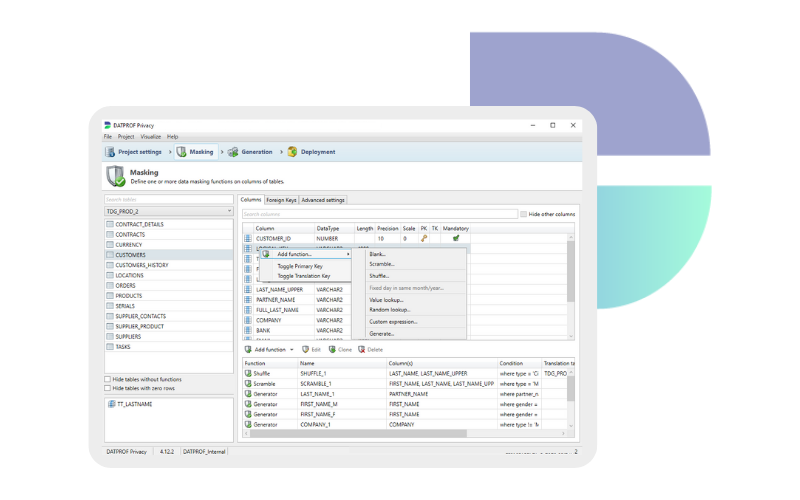

Test data insight and control over the source are needed for every test data management process. You can use them as input for your data masking efforts to anonymize the source. Or you can decide how to reduce the size of the source, for example with a subsetting process. They are also useful for dealing with data quality or other data-related questions. It is valuable to understand the sensitivity of a source and to use these insights to create a strategy around its growth: will it continue to grow, or is it stable in size?

Dirty data

With data insight you have the option of cleaning the source: you can get rid of all anomalies. But if you are anonymizing a privacy-sensitive source for test data management purposes, you want to do the exact opposite. In that case you want all data quality issues to remain intact after anonymizing, so that you can test with data that is as production-like as possible. For example, a distinctive last name can already be a privacy issue in itself. Another issue is that ‘interesting’ data can remain in your databases after a data migration. Issues like these can require different approaches and different masking rules. So it’s really about knowing your data and getting in control.
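To make the idea of keeping data quality issues intact concrete, the sketch below replaces letters and digits but preserves length, casing, spacing and special characters, so an oddly formatted value stays oddly formatted after masking. The `mask_preserving_shape` function and its `secret` parameter are hypothetical, and this is an illustration of the principle only: it is not a vetted anonymization method and should not be relied on to protect real data.

```python
import hashlib
import string

def mask_preserving_shape(value, secret="demo-secret"):
    """Replace letters and digits; keep length, case, spacing and special characters."""
    # The digest makes the result repeatable for the same value and secret.
    digest = hashlib.sha256((secret + value).encode("utf-8")).digest()
    masked = []
    for index, char in enumerate(value):
        byte = digest[index % len(digest)]
        if char.isdigit():
            masked.append(string.digits[byte % 10])
        elif char.isalpha() and char.isupper():
            masked.append(string.ascii_uppercase[byte % 26])
        elif char.isalpha():
            masked.append(string.ascii_lowercase[byte % 26])
        else:
            masked.append(char)  # spaces, hyphens, '#' and similar stay untouched
    return "".join(masked)

original = "Van der Berg-O'Neil #2"
masked = mask_preserving_shape(original)
```

Because the shape survives, a test that fails on a hyphen, an apostrophe or a stray `#` in production will still fail on the masked copy.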

Conclusion

If this information (statistics and profiling) is available and you know where interesting data is stored in your source, you have the insight you need to define actions to improve it. It also creates value for building subset and masking templates. So if you want to open the black box, you need insight into your test data.

Also watch our corresponding webinar “How do you explore your privacy sensitive data sources?”
