Test Data Automation

DATPROF’s mission is to improve the accessibility and availability of test data for software engineers (developers, testers and QA) as well as for the Training team. Our motto is “The right test data in the right place at the right time”. We covered this in our article about Continuous Test Data, which fuels the demand for Test Data Automation. This article shows how Test Data Automation supports faster software development by automating the deployment of test data sets.

Every project needs test data. This need might, and probably will, differ between projects. Sometimes transactional data is the core need – all of the invoices for the last 6 months. Sometimes it’s more a representative sample of the customer base – all of the customers in “this list” with their associated transactions. But getting hold of test data for your project can be a struggle or a challenge; maybe we should call it what it really is – a problem. So how do we overcome this problem? This is where Test Data Automation helps!

Why test data automation?

One of the most important reasons to put test data automation on your backlog is that, once implemented, it will shorten your release delivery cycles tremendously. Research shows that a significant share of software development time (development and testing combined) is lost waiting for the right test data to become available. On average, waiting for the right test data takes more than 5 working days. FIVE! In our experience even this might be optimistic: we have seen that getting the right test data sometimes takes several weeks…

So why does it take so long to get a test data set? The most important elements are:

1. The time before the process is actually started

2. The time it technically takes to execute a refresh

It is important to recognise these problems, because once we understand them we can solve them.

The test data refresh problem

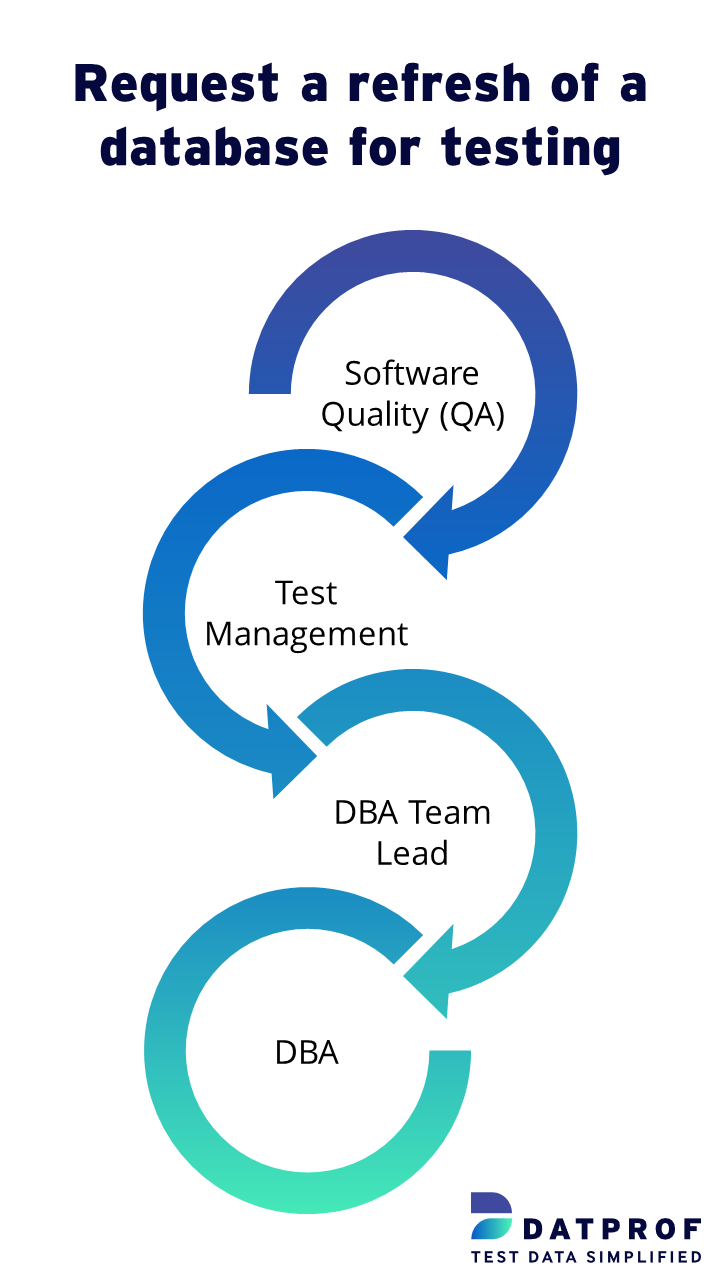

In our experience, at least 3 or 4 people are involved in refreshing a test database. Why? What roles do they play?

Let’s start from the beginning. During a development project the initial demand for test data comes from the developers and testers. They need test data for their automated testing and they also want to have a degree of control over when a new test database is to be deployed. Production and non-production (lower environment) databases are managed by the database administrators – a simple statement of fact. They are, and always will be, the guardians of the data realms.

Handing over control of the lower environment databases to Dev and QA Engineers is not something which is done lightly. Many questions surround these databases. How big will they be or, more appropriately, how much space do they need? Remember, there’s a direct correlation between “space” and “cost”. How volatile will this environment be? Will the ability to refresh quickly be a primary concern, so that the team can revert to a known development or test point? Clearly, before a DBA would even consider handing over control to others, a broader debate should first take place.

But why does it take so many people and so much time? One of the reasons is that refreshing test databases is NOT one of the primary tasks of many DBAs. Often the replenishment of the lower environments takes second place to production tasks.

It makes sense that the core database functions, which keep the business alive and profitable, come before the application development and delivery cycles. But delaying the delivery and deployment cycles has a direct, and often significant, cost to the business: the later in the development and test cycle a problem is found, the higher the cost of identifying and fixing it.

What is the process for requesting a database refresh and how will automation help? The process in most cases looks a bit like this:

- Initial request for a test database from development or test to management;

- Management checks the request and, if agreed, forwards it to the DBA Team Lead;

- DBA Team Lead checks the request and delegates a DBA to deploy the database copy;

- DBA executes and delivers the copy.

Given the above, you can easily see how the process becomes people-intensive and dependent on scheduling and priorities.
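To make the two time elements concrete (the time before the refresh actually starts and the time the refresh itself takes), here is a minimal sketch that models the request chain listed above as a series of hand-offs, each with a waiting time and a working time. The step durations are invented example values in hours, not measured data, and the `Step` and `lead_time` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    wait_hours: float  # time the request sits in a queue before someone acts (assumed)
    work_hours: float  # time spent actually handling the step (assumed)


def lead_time(steps: list[Step]) -> float:
    """Total elapsed hours from the initial request to a usable test database."""
    return sum(s.wait_hours + s.work_hours for s in steps)


# Illustrative durations only; real values differ per organisation.
traditional = [
    Step("request to management", wait_hours=8, work_hours=0.5),
    Step("management approval", wait_hours=16, work_hours=0.5),
    Step("DBA team lead delegates", wait_hours=8, work_hours=0.5),
    Step("DBA executes refresh", wait_hours=8, work_hours=4),
]

# Self-service: the requester triggers the refresh directly, so only the refresh remains.
self_service = [Step("self-service refresh", wait_hours=0.0, work_hours=4.0)]

print(lead_time(traditional))   # 45.5
print(lead_time(self_service))  # 4.0
```

With these assumed numbers, almost all of the lead time is waiting rather than work, which is exactly the part a self-service refresh removes.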

Test data provisioning

If only Development, Test and QA could manage their own test data, part of the ‘problem’ would immediately be solved. The ability to deploy a test database refresh on demand removes the multiple layers of request and authority inherent in the traditional environment. As a result, the deployment time is limited to the time it takes to execute the refresh itself.

But what is needed to put a process like this in place? It is entirely practical to think that two of the DBA functions could be handed to the lower environment users with confidence. The first is making sure that the deployment is executed with zero threat to the source database(s). The second is ensuring that the deployed database conforms to data protection regulations and has a footprint within reasonable bounds. In other words, database subsetting and masking techniques are an inherent part of the process, so compliance and good housekeeping are implemented by default.
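The two requirements can be illustrated with a small, generic sketch in plain Python. It builds a subset (the customers on a given list plus their associated transactions), masks the customer names with deterministic pseudonyms, and only reads from the source data so the source is never modified. The record layout, the `subset_and_mask` and `pseudonym` functions and the hash-based masking rule are assumptions for illustration; this is not how DATPROF implements subsetting or masking.

```python
import copy
import hashlib


def pseudonym(value: str, salt: str = "demo-salt") -> str:
    """Deterministic pseudonym: the same input always maps to the same output.

    The fixed salt is for demonstration only; a real setup would keep it secret.
    """
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "Customer-" + digest[:8]


def subset_and_mask(customers, transactions, customer_ids):
    """Return masked copies of the selected customers and their transactions.

    The source lists are only read; all changes are made on deep copies.
    """
    wanted = set(customer_ids)
    sub_customers = [copy.deepcopy(c) for c in customers if c["id"] in wanted]
    # Keep referential integrity: only transactions belonging to the subset.
    sub_transactions = [
        copy.deepcopy(t) for t in transactions if t["customer_id"] in wanted
    ]
    for c in sub_customers:
        c["name"] = pseudonym(c["name"])
    return sub_customers, sub_transactions


source_customers = [
    {"id": 1, "name": "Alice Jansen"},
    {"id": 2, "name": "Bob de Vries"},
    {"id": 3, "name": "Carla Smit"},
]
source_transactions = [
    {"id": 10, "customer_id": 1, "amount": 120.0},
    {"id": 11, "customer_id": 2, "amount": 75.5},
    {"id": 12, "customer_id": 1, "amount": 30.0},
]

customers, transactions = subset_and_mask(source_customers, source_transactions, [1])
print(len(customers), len(transactions))  # 1 2
print(source_customers[0]["name"])        # Alice Jansen (source untouched)
```

Deterministic pseudonyms keep the same person recognisable across tables and refreshes, which helps testing; whether that is sufficient for a given data protection regime is a separate assessment.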

In our experience DBAs like to know what DATPROF Test Data Management does and how it executes the various tasks: masking, subsetting and automated deployment. This understanding comes quickly and soon results in a set of deployed projects that deliver the two core requirements.

This means that DBAs can confidently adopt a supervisory role in lower environment database deployments!

Test data automation tools

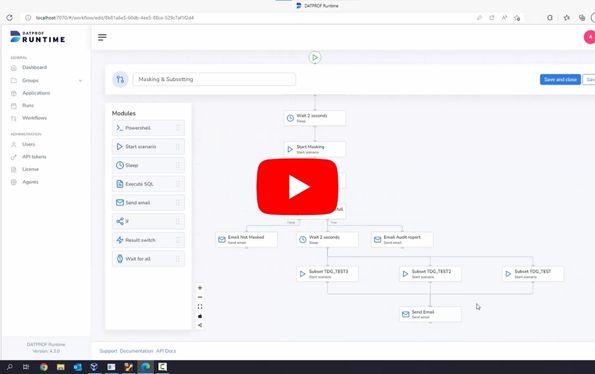

Once developed, DATPROF applications can be automated using the DATPROF Runtime module. This easy-to-use service request broker can be accessed either through an intuitive browser interface or through an API, which Automation Servers or Job Management Systems can use to manage and control it. This means that it can easily be integrated with DevOps automation tools such as Jenkins, ServiceNow, XebiaLabs or the Atlassian suite.

The workflow feature makes it easy to drag and drop modules to automate and integrate every part of the test data provisioning pipeline.
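Conceptually, such a workflow is an ordered chain of steps in which each step runs only if the previous one succeeded. The sketch below shows that idea in plain Python; the step names (`subset`, `mask`, `deploy`) and the `run_workflow` function are hypothetical and do not represent DATPROF Runtime's API or internals.

```python
from typing import Callable

# A step is a name plus an action that returns True on success.
Step = tuple[str, Callable[[], bool]]


def run_workflow(steps: list[Step]) -> list[str]:
    """Run steps in order and stop at the first step that reports failure.

    Returns a log with one 'name: ok' or 'name: failed' entry per executed step.
    """
    log = []
    for name, action in steps:
        succeeded = action()
        log.append(f"{name}: {'ok' if succeeded else 'failed'}")
        if not succeeded:
            break  # later steps depend on this one, so do not continue
    return log


# Hypothetical steps; a real pipeline would call the tools that do the work.
steps = [
    ("subset", lambda: True),
    ("mask", lambda: True),
    ("deploy", lambda: True),
]
print(run_workflow(steps))    # ['subset: ok', 'mask: ok', 'deploy: ok']

failing = [("subset", lambda: True), ("mask", lambda: False), ("deploy", lambda: True)]
print(run_workflow(failing))  # ['subset: ok', 'mask: failed']
```

Stopping at the first failure matters here: if masking fails, the unmasked copy is never deployed to a lower environment.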

Just as importantly, the interface can be configured to control, or restrict, user access to the masking and development projects. This means that a self-service delivery mechanism can be deployed quickly and with confidence. The end result is that you resolve the test data bottleneck by delivering the right test data to your users, in the right place and at the right time. You’re giving them what they want, when they want it!
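A minimal sketch of that kind of restriction, assuming a simple role-based model: each role lists the projects it may run, and a user may start a refresh only if one of their roles allows that project. The role, user and project names are hypothetical, and this is not DATPROF Runtime's actual permission model.

```python
# Hypothetical roles and the projects each role may run.
ROLE_PROJECTS = {
    "tester": {"crm-subset-masked"},
    "developer": {"crm-subset-masked", "billing-subset-masked"},
    "dba": {"crm-subset-masked", "billing-subset-masked", "masking-config"},
}

# Hypothetical user-to-role assignments (in practice e.g. from a directory service).
USER_ROLES = {
    "anna": {"tester"},
    "ben": {"developer"},
}


def can_run(user: str, project: str) -> bool:
    """True if any of the user's roles grants access to the project."""
    roles = USER_ROLES.get(user, set())
    return any(project in ROLE_PROJECTS.get(role, set()) for role in roles)


print(can_run("anna", "crm-subset-masked"))      # True
print(can_run("anna", "billing-subset-masked"))  # False
print(can_run("ben", "masking-config"))          # False
```

Unknown users have no roles and are therefore denied by default, which is the safe choice for a self-service portal.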

Having one high-performance Test Data Management portal is crucial in a test automation approach. Imagine being able to execute and monitor test data subsetting, masking and generation from one single portal for all your tests. From this portal you can deploy test data to multiple teams and environments at the same time. Portal users log in, refresh their own test data environment and execute multiple processes. It’s the most efficient way of generating test data you can think of.

DATPROF Runtime

- Refresh environments using a self-service portal

- Automate processes with events and triggers

- Build extensive workflows using drag-and-drop

- Integrate it into your CI/CD platform using the REST API

- Integrate with Bamboo, Jenkins, ServiceNow and more

- Manage roles and permissions with Active Directory integration

The impact of a subset

Creating or refreshing a database takes time. As mentioned at the start of this article, the copy itself is one of the main time factors. This time can be reduced in many ways: more CPU power, more memory and faster I/O subsystems will always help. One simple fact remains: the bigger the source database, the more time it takes to create a new database in the lower environments. Size matters. It follows that working with subsets of production databases will significantly reduce the time taken to deploy to these environments: the smaller the database, the quicker it can be created. Why subsetting is so relevant is discussed in our post about the data subsetting solution.
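As a rough illustration, assume copy time grows linearly with database size at a fixed throughput and ignore the time needed to extract the subset itself (in practice indexes, constraints and I/O contention make it less tidy). The sizes and throughput below are invented example values, and `copy_minutes` is a hypothetical helper.

```python
def copy_minutes(size_gb: float, throughput_gb_per_min: float) -> float:
    """Estimated copy time, assuming time is proportional to size."""
    return size_gb / throughput_gb_per_min


full = copy_minutes(size_gb=2000, throughput_gb_per_min=10)   # full production copy
subset = copy_minutes(size_gb=100, throughput_gb_per_min=10)  # 5% subset
print(full, subset)   # 200.0 10.0
print(full / subset)  # 20.0
```

Under this linear assumption, a 5% subset deploys 20 times faster; the real gain depends on how the copy time scales in your environment.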
