Test Data Management

Improve your software delivery process

There’s an ever-growing need for software development to be better, faster, and more affordable. With rising end-user demand and unrelenting competition, implementing a proper testing strategy is critical. An effective testing strategy includes a range of components, one of which is Test Data Management (TDM).

Test data management is one of the big challenges we face in software development and QA. Test data needs to be highly available and easy to refresh in order to improve the quality, and ultimately the time to market, of your software. Legislation such as the GDPR makes TDM even more of a challenge. So how do you manage your test data?

What is test data management?

First, let us agree on what the term means: TDM is the creation, management and provisioning of realistic test data for non-production purposes such as training, testing, development or QA. It ensures that testing teams get test data of the right quality, in a suitable quantity, in the proper environment, in the correct format, and at the appropriate time: the right test data in the right place at the right time.

The need for test data management

Almost every developer is convinced that you first have to test a new product to know whether it lives up to expectations, rather than ruin the company’s name by releasing unstable software. For that reason, test drivers drive endless laps in new concept cars. In the same way, software testers try out the latest versions of new applications.

For an application to work it needs fuel, just as a car does. An application is built to process information: data. No data means no processing. That is why there is a need for test data management: test data is the fuel of an application in a test environment.

The attention paid to test data is, however, surprisingly low, on the assumption that testers will gather whatever data they need for the test cases at hand. The application is in a test environment, and in that particular environment data is present, which means the tests can be executed. If only it were as simple as that…

To make a final comparison with a car: if you pour diesel into a petrol-powered car, you probably won’t get far. If on top of that you don’t know you made that mistake, you will probably end up taking the engine apart to find out why it is not functioning properly, only to discover that the wrong fuel was to blame. Test data can be just like that. To be able to assess whether the result of any given test is correct, you need to be absolutely sure the input given to the application is valid.

Software testing variables

One can say testing is made out of three variables:

1) test object

2) environment

3) test data

If you want the testing process to run smoothly, meaning that the only defects found are defects in the software under test (the test object), you need to control and manage the other two variables. When you are not in control of test data, complicating factors will arise during test execution.

To be in control of data in any given environment test data management is a necessity. Test data is not limited to one object, environment or testing type, but influences the whole of applications and processes in your IT-landscape. It is therefore a necessity to think about test data management and lay down a policy.

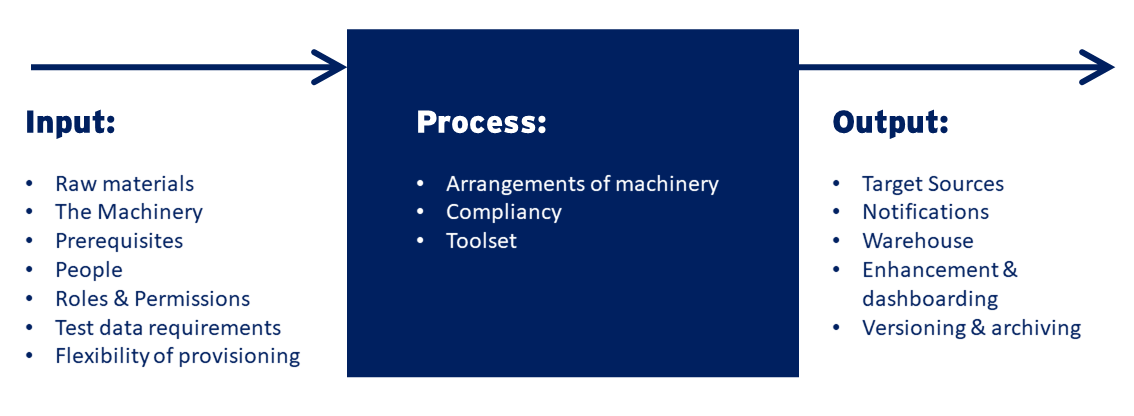

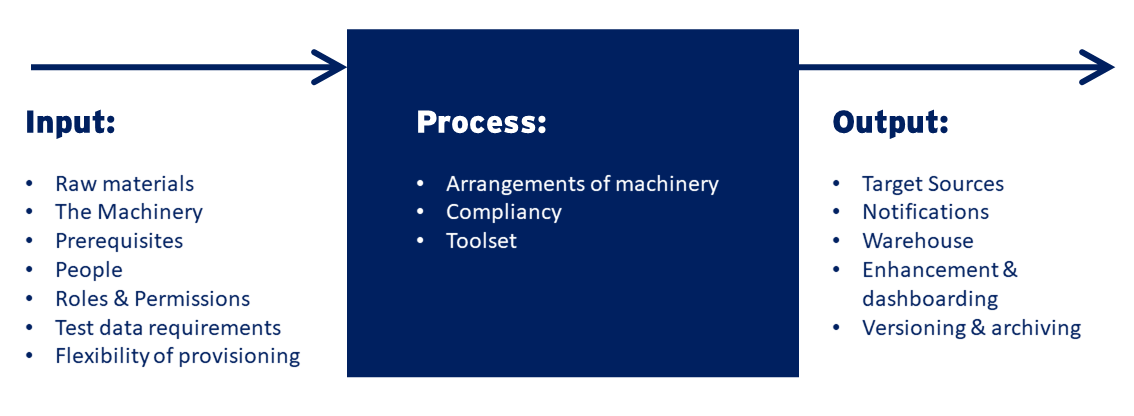

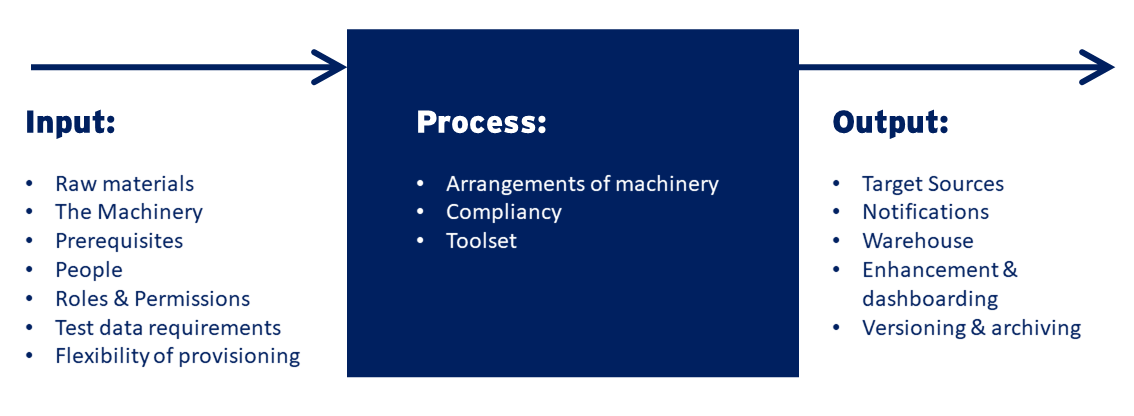

Test data manufacturing

The creation of test data is often a black box. The output is the result of what happens in the black box and the black box can only deliver the requested test data with the right input. But what do you need for the output, the actual process or the input? The answer lies in ‘Test Data Management’.

Let’s use the example of a car factory. The output of that factory is a car. But for that output you also need a warehouse, where the finished cars can be stored before they go to the customer. Once enough cars are built, logistics needs to know that there are cars ready for transport, because the warehouse is not unlimited in size. The car factory becomes even more interesting if it has to build all kinds of cars, e.g. not only hatchbacks but also SUVs.

If we were to build a test data factory, what kind of factory would that be? Would that be a factory that delivers only one type of test data or different sorts? Should it be possible to meet specific requirements of team members (do they want a hatchback or an SUV)?

The next paragraphs explain the management of test data, starting with the output. When we’ve figured out the output and have a clear picture of our expectations of test data, we know what kind of input is required. Then we can design a test data factory that works.

Availability of the Target source(s)

After the execution processes have taken place, we have test data that needs to be delivered to an environment. So what we need is some sort of logistics that enables you to get the test data into the right place or source (a database or flat file). This should be managed properly, because test data cannot simply be inserted into any environment.

It is important that target sources are available before a test data execution process has finished. It is also important to be clear on how many test environments are needed. This is more of a test data architecture decision, but as an individual requesting a test data set, you should be able to determine which target environment the test data is delivered to.

Notifications

A factory does not build a car instantly, and the same applies to test data. Every single step in the test data factory takes time. It is therefore important to stay well informed during the process, for example through notifications. When the process takes longer than expected or an error occurs, you want to know as soon as possible to avoid wasting time. The simplest notifications are the ‘done’ ones: if the process is executed correctly, the requesting party gets a notification that the test data is ready to use. In your test data factory this notification can be an email or a text message. With the help of well-timed notifications you can keep delays to a minimum.
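The three situations described above (an error, a finished job, a job that overruns) can be sketched as a small decision function. This is only an illustration: the `JobStatus` fields, the message texts and the idea of an "expected" run time are assumptions made for the example, not part of any particular TDM product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JobStatus:
    """Snapshot of a (hypothetical) test data job."""
    name: str
    elapsed_minutes: float
    expected_minutes: float
    finished: bool = False
    error: Optional[str] = None


def build_notification(status: JobStatus) -> Optional[str]:
    """Return a message for the requester, or None if there is nothing to report."""
    if status.error is not None:
        return f"[FAILED] {status.name}: {status.error}"
    if status.finished:
        return f"[DONE] {status.name}: test data is ready to use"
    if status.elapsed_minutes > status.expected_minutes:
        overrun = status.elapsed_minutes - status.expected_minutes
        return f"[DELAYED] {status.name}: {overrun:.0f} min over the expected run time"
    # Still running and within the expected time: stay quiet.
    return None
```

The returned text could then be handed to whatever channel you use, such as email or a text message.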

Data Warehouse

At the end of the process, you will have a delivered set of test data which can be stored in a warehouse. The size of this warehouse depends at least on the reliability of the delivery process and the time it takes to produce test data sets. The more reliable the process, or the quicker you can produce, the smaller the warehouse can be. The less reliable the process, or the longer it takes to produce, the larger the warehouse must be. If your test data processes take several hours, or if there is a chance they might crash, you should probably consider a “back-up” strategy. If it is a seamless and fast process, maybe you do not need such a strategy. So, the size of your warehouse depends on how quickly and reliably new sets can be delivered.

Enhancements and dashboarding

As with any organization or factory, the perfect test data factory (if it even exists) is probably not the first one you build. Getting to a more reliable and better performing factory is a process; it is not something that is achieved overnight. But creating such a process pays off, because a fast and reliable factory does not need an expensive ‘warehouse’. An important aspect of building a test data factory is the refinement process. For this you need proper logging, error handling and dashboarding. If the test data process has issues, you need to be able to see them quickly and take measures. Logging of your test data processes is essential to know what happened. If the factory is underperforming you want to know why: you want to detect the bottleneck and solve it. Your test data management team should include experts in these areas, people who can analyse the logging, understand it and improve the process.
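As a minimal sketch of what "detecting the bottleneck" can look like in practice, the helper below records how long each step of a test data process takes and reports the slowest one. The class name `StepTimer` and the step names are made up for this example; a real setup would also write the timings to a log or dashboard.

```python
import time
from contextlib import contextmanager


class StepTimer:
    """Collects the run time of each named step in a test data process."""

    def __init__(self):
        self.durations = {}  # step name -> seconds

    @contextmanager
    def step(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the step raised an error.
            self.durations[name] = time.perf_counter() - start

    def bottleneck(self):
        """Return the name of the slowest recorded step, or None if empty."""
        if not self.durations:
            return None
        return max(self.durations, key=self.durations.get)


timer = StepTimer()
with timer.step("extract"):
    pass  # placeholder for reading from the data source
with timer.step("mask"):
    pass  # placeholder for masking the extracted data
```

After a run, `timer.durations` holds the timings per step and `timer.bottleneck()` names the step that deserves attention first.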

Versioning/archiving

What about recurring test data requests, where the user asks for the exact same test data set multiple times? If you prepare your factory for this kind of request, the initial delivery of the test data set might have teething problems. But after the initial runs these problems should be solved, and from then on distribution should be an easy job. To create such a delivery process we need to document or archive how we created the test data set, or we could archive the actual test data itself. We should also decide how long to keep these archives. How long after the introduction of a new car model do you need to keep the documents of the older versions? While you are working on a specific IT project, being able to refresh quickly is valuable. After the project is finished, you may want to keep some information, but the distribution of test data for that project becomes less important.

Raw materials

So we have discussed the output; now we need to define what the input should be to get such an output. To start a process in a factory you need raw materials. In the test data factory these are data sources: databases, flat files or other sources that contain data, which we can process into test data. These sources may be empty or pre-filled. If they are pre-filled, they will probably be filled with production data, and if they contain private data you might need to make them compliant. Once we have these data sources, we can process the data and deliver test data.

The machinery

The processing of the data is done by machinery. We therefore need the proper machinery installed and configured. The type of machinery might differ. On the first Ford assembly line, for example, you could choose any colour as long as it was black. In such circumstances you probably do not need much machinery, because the level of complexity is low.

“Any customer can have a car painted any color that he wants so long as it is black” – Henry Ford

But if you want to manufacture several different kinds of cars, you will probably need different types of machinery. The same goes for IT: if you have a single system, you will probably be fine with a less sophisticated solution, but if you have a hybrid IT landscape, you probably need more than one type of machinery, or at least machinery that enables you to distribute test data to these different kinds of systems. Maybe you should consider a best-of-breed strategy.

Prerequisites

Before you can start the machinery, you might need high-voltage power in your factory. In other words, you might need to fulfil some prerequisites before the factory can start producing. This also applies to test data. Before you can start the actual process you need the right permissions to execute test data processes, and before you can manipulate the raw materials you need to configure everything in such a way that they can be manipulated. So some pre-processing settings have to be arranged up front. This needs to be managed, and it is something you want to arrange only once.

Skilled People

Now we have talked about several elements, but what about skilled people? They are certainly needed, since they will run the machinery. Your test data team should be able to work with the machinery to create sets of test data that are based upon certain prerequisites. To fulfil these needs, the (test data) engineer should understand data, or more specifically, he or she should understand databases and other sources. Writing an SQL statement is a much valued skill in your test data factory. But test data engineers cannot do everything on their own. They should have the assistance of a team of system administrators and (business) analysts.

Test data requirements

Test data engineers should be able to create test data that fulfils the needs of the end user: the software development and/or software quality team. To create the right test data, the test data engineers need the software quality team to define the requirements of the test data. It is like selecting a new car: with a car configurator you create your own car by selecting your personal wishes and needs. For test data management you should have a test data configurator that helps you define the needs of your test data. These needs can be based upon the test cases you have been developing or upon a data profile.

This is an important one, because we see that end users and the ‘test data team’ often lack a sense of each other’s ‘problems’. Bridging this gap in understanding is important; therefore, training for both sides might be required. Let me try to explain with a simple example:

The test team wants a test case with two car insurance policies on a single account: one policy should be for a car older than 30 years and the other for a newly bought car younger than 12 months. Perfectly fine test case, right? However, the ‘test data team’ might know that there are more interesting test data cases. Should they just give the team what they want? Or should they act like a consultant? In the end this also comes down to whether you know what kinds of (test) data anomalies exist in production. For getting the right level of software quality these questions might be very valuable.
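To make the requirement from this example concrete, here is a small sketch that builds such an account synthetically and checks that it really meets the request. The field names (`account_id`, `first_registered`, and so on) and the identifiers are invented for illustration; a real policy administration system will have its own model.

```python
from datetime import date, timedelta


def age_in_years(first_registered: date, today: date) -> float:
    """Approximate age of a car in years."""
    return (today - first_registered).days / 365.25


def build_account(today: date) -> dict:
    """Synthetic account with one classic-car policy and one new-car policy."""
    return {
        "account_id": "ACC-0001",
        "policies": [
            # Roughly 35 years old: comfortably older than 30 years.
            {"policy_id": "POL-0001",
             "first_registered": today - timedelta(days=35 * 365)},
            # Roughly 3 months old: younger than 12 months.
            {"policy_id": "POL-0002",
             "first_registered": today - timedelta(days=90)},
        ],
    }


def meets_requirement(account: dict, today: date) -> bool:
    """True if the account has exactly two policies: one car over 30 years
    old and one car under 12 months old."""
    ages = [age_in_years(p["first_registered"], today)
            for p in account["policies"]]
    return (len(ages) == 2
            and any(a > 30 for a in ages)
            and any(a < 1 for a in ages))
```

A check like `meets_requirement` is also a natural place for the test data team to add the extra anomalies they know from production.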

Flexibility in provisioning of test data

And this is where the factory actually starts producing. The customer submits a test data request. But how should the test data be delivered or provisioned once the requirements are approved and the test data is ready? We can notify the customer when the test data is ready to use. But delivery could also be automated: instead of going to a test data configurator, you may want test data to be delivered via an API. With the help of this API, delivery becomes a truly automated process that can be integrated into your assembly line. Your test data factory then becomes part of a bigger production chain; you can integrate it into your CI/CD pipeline.
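What an API-driven request could look like from a pipeline’s point of view is sketched below. Everything here is a stand-in: `InMemoryProvisioner`, its methods and the state names are hypothetical and only mimic the shape of a provisioning service, they are not the API of any specific tool.

```python
import itertools


class InMemoryProvisioner:
    """Stand-in for a test data provisioning API (hypothetical)."""

    def __init__(self, known_targets):
        self._known_targets = set(known_targets)
        self._ids = itertools.count(1)
        self._requests = {}

    def request(self, dataset: str, target: str) -> int:
        """Register a request for a data set in a target environment."""
        if target not in self._known_targets:
            raise ValueError(f"unknown target environment: {target}")
        request_id = next(self._ids)
        self._requests[request_id] = {
            "dataset": dataset, "target": target, "state": "queued"}
        return request_id

    def run(self, request_id: int) -> None:
        """Simulate the factory finishing the job."""
        self._requests[request_id]["state"] = "ready"

    def state(self, request_id: int) -> str:
        return self._requests[request_id]["state"]


def pipeline_step(api: InMemoryProvisioner, dataset: str, target: str) -> bool:
    """What a CI/CD job might do before it starts its tests."""
    request_id = api.request(dataset, target)
    api.run(request_id)  # a real service would do this asynchronously
    return api.state(request_id) == "ready"
```

In a real pipeline the job would poll the state, or wait for a notification, and only start the test run once the data set is reported as ready.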

The arrangement of machinery

Depending on what kind of an assembly line you are trying to build you might need different types of machinery. The assembly line is dependent on the output. For example, if your software development is based upon waterfall methodologies, you might need to have machinery which can deliver specific test data at a certain moment, while if you are more agile, you probably are more interested in flexibility.

So in the first case, you want to create a process flow in your factory where the delivery time can be promised. To get this, every station will probably create a small piece of the end product, each with its own expertise. In a more agile organization, by contrast, somebody or some department at every station of your factory builds their own test data sets. So depending on your organization, you have to rethink how test data should be developed or created.

Test data compliance

During the process compliance should also be considered. If you are using sets of production data in your factory which contain privacy sensitive data, you need to get compliant. But at what point do you want or need to get compliant in your production process? At the beginning or at the end of the process?

There is also a need for auditing. Organizations want to make sure that, after the process is done, it is possible to check how it was done. So logging and auditing in your test data factory are important, not only for the data protection officers, but even more so to improve the process step by step. Because having a factory is one thing; maintaining and improving it might be even more important.

TDM toolset

Depending on what type of test data factory you want or need, you can choose from several types of tools. If you have privacy sensitive information, you will probably need something to get compliant. For that you can think about data masking or synthetic test data solutions, so one of these should be among the tools you need.

If size is slowing down the delivery of test data, you probably need a solution that reduces the size so you can quickly refresh your environments. You do not always need both. For example, when you do not store any privacy sensitive data but you do have a large database, you will only need a tool to reduce the size of your test data set. But if you have a small database which contains privacy sensitive data, you might only need a data masking or synthetic test data generation tool.
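The reasoning above boils down to two independent questions, which the following sketch turns into a tiny helper. The function name and the threshold for what counts as "large" (500 GB here) are arbitrary assumptions; the right cut-off depends on how quickly your environments need to be refreshed.

```python
from typing import List


def recommend_tools(has_sensitive_data: bool,
                    size_gb: float,
                    large_from_gb: float = 500.0) -> List[str]:
    """Suggest tool categories based on privacy sensitivity and size.

    large_from_gb is an illustrative threshold, not a fixed rule.
    """
    tools = []
    if has_sensitive_data:
        tools.append("data masking or synthetic data generation")
    if size_gb >= large_from_gb:
        tools.append("subsetting")
    return tools
```

For example, `recommend_tools(False, 2000)` suggests only subsetting, while `recommend_tools(True, 20)` suggests only masking or synthetic data generation.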

The last interesting piece of the toolset is an inspection tool. Before you start the test data manufacturing process, you want to make sure that the goods delivered are of the right quality. A data discovery or data profiling tool can therefore be very valuable for understanding the raw data materials that enter the actual process.
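A very rough idea of how such an inspection can work: sample the rows of a source and flag columns whose values mostly look like email addresses or phone numbers. The patterns and the 80% threshold below are simplistic assumptions for the sake of the example, and heuristics like these will produce false positives (a long numeric ID can look like a phone number), so the result is a starting point for review rather than a verdict.

```python
import re

# Deliberately simple patterns; real discovery tools use far richer rules.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "phone": re.compile(r"^\+?[\d\s\-()]{7,}$"),
}


def profile_columns(rows, min_ratio=0.8):
    """Map column name -> suspected category for columns where at least
    min_ratio of the non-empty values match a pattern."""
    findings = {}
    columns = list(rows[0].keys()) if rows else []
    for column in columns:
        values = [str(row[column]) for row in rows
                  if row.get(column) not in (None, "")]
        if not values:
            continue
        for label, pattern in PATTERNS.items():
            hits = sum(1 for value in values if pattern.match(value))
            if hits / len(values) >= min_ratio:
                findings[column] = label
                break
    return findings
```

Columns that show up in the result are candidates for masking before the data enters the rest of the process.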

Implementing your TDM process

Many challenges can complicate the TDM process. Resource consumption, protection of private data, storage, potential data loss, timely data reversions, and conflicting test priorities are just a few examples. When overlooked, these challenges can cause significant setbacks. Luckily, an effective TDM implementation can resolve them. Let us now look at five stages for implementing your test data management process effectively.

Planning

This is where you define both TDM and the data requirements for data management. Then, you prepare the necessary documents, including the list of tests that need to be done. Once everything checks out, you can form the test data management team and sign off the relevant plans and papers.

Analysis

The main activities of the analysis stage are collecting and consolidating data requirements, arranging access, and checking the policies around data backup and storage.

Design

Here is where you determine the data preparation plan. Design involves identifying data sources and providers, as well as the areas of the test environment that require data loading and reloading. It is the last stage before implementing the test data management strategy, so any other pending plan should be created here. These include plans for test activities, data distribution, and communication and coordination, along with the documentation for the data plan.

Development

This is where you build or implement the TDM process by executing all the plans from the previous stages. If need be, you can mask your data at this point and back up all data.

Maintenance

Once you have implemented the TDM process, you have to maintain it too. In this case, maintenance does not just involve identifying and fixing issues with the test data management tools and process, but also adding new data or responding to requests to update existing test data when necessary.

Test Data Management strategies

Test data management techniques

TDM encompasses data generation, data masking, scripting, provisioning, and cloning. The automation of these activities will enhance the data management process and make it more efficient. A possible way to do this is to link the test data to a particular test and feed it into automation software that provides data in the expected format.

Automation

More and more businesses are automating repetitive processes and tests. They also automate the creation of test data – something that you should do too. By automating your software testing procedure, you save your team time, reduce business expenses, and improve the feedback cycle. You also get better insights, higher test coverage, and a chance to bring your products to the market faster.

Identify and protect sensitive data

In most cases, you need large sets of relevant data to verify or test applications. This data comes from end users through your apps or websites and goes to the quality analysis teams for test cases. The information must be safeguarded against theft or breach, especially in the development process, where personal data like bank details, medical history, credit card information, or phone numbers can be exposed.

It is possible to simulate test data in order to create a realistic environment, and how realistic that environment is can affect the outcome of your tests. Masking data from production makes it possible to use real data for testing applications without violating regulations. Masking gives you a realistic picture of the production environment, allowing you to determine how the app will work in a real-world setup.
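One common way to mask while keeping the data realistic is deterministic masking: the same input always maps to the same replacement, so relationships between records stay intact. The sketch below illustrates the idea with Python’s standard `hmac` and `hashlib` modules. The key, the list of replacement names and the record layout are assumptions for the example, and whether a given technique is sufficient for your compliance requirements is something to verify with your data protection officer.

```python
import hashlib
import hmac

# Assumption: in practice the key is a secret kept outside the test environment.
SECRET_KEY = b"replace-me"

FIRST_NAMES = ["Alex", "Sam", "Robin", "Kim", "Charlie", "Jamie"]


def _digest(value: str) -> bytes:
    """Keyed hash of the original value; the basis for all replacements."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).digest()


def mask_name(value: str) -> str:
    """Pick a replacement name; the same input always gives the same output."""
    return FIRST_NAMES[_digest(value)[0] % len(FIRST_NAMES)]


def mask_digits(value: str) -> str:
    """Replace every digit, keeping the length and the separators."""
    stream = _digest(value)
    out = []
    used = 0
    for ch in value:
        if ch.isdigit():
            out.append(str(stream[used % len(stream)] % 10))
            used += 1
        else:
            out.append(ch)
    return "".join(out)


def mask_record(record: dict) -> dict:
    """Return a copy with the sensitive fields masked; other fields untouched."""
    masked = dict(record)
    masked["first_name"] = mask_name(record["first_name"])
    masked["phone"] = mask_digits(record["phone"])
    return masked
```

Because the format of the phone number is preserved, validation rules in the application under test keep behaving as they would with production data.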

Exploring the test data

Data comes in all forms and sizes and can be distributed across different systems too. Your respective teams have the task of filtering through these data sets to find what matches their test cases and requirements. In most cases, they will need to find the right data in the specified format within a certain timeframe.

But locating and retrieving data manually is a tedious task that might lower the efficiency of the process. That is why it is vital to introduce a test management solution that facilitates effective data visualization and coverage analysis – like TDM.

TDM plays a significant role in the testing industry. Behind its uptake are significant financial losses from production defects, time loss, lawsuits, and lack of precision and accuracy. Years back, test data was limited to a few sample input files or a few rows of data in the database. Today, companies depend on robust sets of test data with unique combinations that yield high coverage to drive the testing.

Benefits of Test Data Management

There are several reasons to start with TDM. Frequently heard reasons are 1) we need to do something with data anonymization or synthetic data generation because of privacy laws and 2) we want to go to market faster, but large environments are holding us back.

The importance of test data obfuscation can be found in the fact that 15% of all bugs that are found are data related. These bugs are caused by, for example, data quality issues. Masking data helps you keep these data-related issues in your test data set, so that the bugs are found and solved before you go to production.

The need to go to market faster is a point of discussion at many organizations now. They are therefore looking at methods like DevOps, Agile and Continuous Testing. But in many cases the technical infrastructure is holding them back. The soft side of agile is “pretty” easy; the hard part is the infrastructure. In that respect we all still live in a waterfall era, because test databases cannot cope with the number of agile teams.

Nowadays it sometimes takes up to 1 or even 2 weeks before a test database is refreshed. In today’s fast-paced software delivery that should be unacceptable. And to make things even worse, a single refresh can involve more than 3 people. So a lot of time is wasted in your software delivery process.

TDM software

Being in control of test data is getting more important. With the help of subsetting technology you can deploy smaller sized sets of test data to an environment. These are flexible and sizing is not an issue anymore. With a good test data management platform you can easily give every test team their own (masked) test data set which they can refresh on demand. This approach is not only great for efficiency and performance, but also the strategy that will help your business grow and become industry leading.
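To illustrate what subsetting means technically, here is a minimal sketch using Python’s built-in `sqlite3` module: it selects a slice of one table and then only the rows in a related table that reference that slice, so the subset stays referentially consistent. The tables, columns and the "region" filter are invented for the example and say nothing about how any particular subsetting product works internally.

```python
import sqlite3


def build_source() -> sqlite3.Connection:
    """Create a tiny in-memory 'production-like' source database."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers(id),
            amount REAL
        );
        INSERT INTO customers VALUES (1, 'north'), (2, 'south'), (3, 'north');
        INSERT INTO orders VALUES
            (10, 1, 25.0), (11, 2, 40.0), (12, 3, 15.0), (13, 2, 5.0);
    """)
    return conn


def subset(source: sqlite3.Connection, region: str) -> dict:
    """Select the customers in one region plus only the orders that
    reference those customers."""
    customers = source.execute(
        "SELECT id, region FROM customers WHERE region = ? ORDER BY id",
        (region,),
    ).fetchall()
    orders = source.execute(
        "SELECT id, customer_id, amount FROM orders "
        "WHERE customer_id IN (SELECT id FROM customers WHERE region = ?) "
        "ORDER BY id",
        (region,),
    ).fetchall()
    return {"customers": customers, "orders": orders}
```

The resulting rows could then be loaded into a much smaller test database, which is what makes frequent refreshes practical.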

The DATPROF software suite consists of several products that allow its customers to realize test data management solutions. The heart of the suite is formed by DATPROF Runtime. This is the test data provisioning platform where the execution of DATPROF templates takes place. In a typical test data management implementation the most frequently used tools are:

- DATPROF Analyze for the purpose of analyzing and profiling a data source;

- DATPROF Privacy for the purpose of modelling masking templates;

- DATPROF Subset for the purpose of modelling subset templates;

- DATPROF Runtime for the purpose of running generated code, templates and the distribution of datasets.

The patented DATPROF suite is designed to minimize effort (hours) during each stage of the lifecycle. This translates directly into its high implementation speed and ease of use during maintenance.

Data Masking

Mask privacy sensitive data, generate synthetic data and use it for development and testing.

Data Subsetting

Extract small reusable subsets from large complex databases and speed up your testing.

Data Provisioning

Monitor, automate and execute your test data from one central test data portal.

Data Discovery

Get new insights in data quality and find out where privacy sensitive information is stored.

This article was created partly by Jordan MacAvoy, Vice President of Marketing at Reciprocity Labs.